Architecting TDD for Services

Apply test-driven development at the API level to improve and streamline your testing process. Build a test-driven architecture that embraces the process from the start.

Beyond Unit Testing

Test-driven development (TDD) is a well-regarded technique for improving the development process, whether you are developing new code or fixing bugs. First, write a test that fails, then make it work minimally, then make it work well; rinse and repeat. The process keeps the focus on value-added work and uses testing as a prompt to improve the design under test rather than only to verify its behavior. This, in turn, also improves the quality of your tests, which become a valued part of the overall process rather than a grudgingly necessary afterthought.

The common discourse on TDD revolves around testing relatively small, in-process units, often just a single class. That works great, but what about the larger 'deliverable' units? When writing a microservice, the services are of primary concern, while the various smaller implementation constructs are simply enablers for that goal. Testing of services is often thought of as outside the scope of a developer working within a single codebase. Such tests are often managed separately, perhaps by a separate team, using different tools and languages. This tends to make such tests opaque and of lower quality, and it adds inefficiencies by requiring a commit/deploy cycle as well as coordination with a separate team.

This article explores how to minimize those drawbacks by applying TDD principles at the service level. It also addresses the corollary that such tests naturally overlap with other API-level tests, such as integration tests, by progressively leveraging the same set of tests for multiple purposes. It can also be read as a practical guide to shift-left testing from both a design and an implementation perspective.

Service Contract Tests

A Service Contract Test (SCT) is a functional test against a service API (black box) rather than against the internal implementation mechanisms behind it (white box). In their purest form, SCTs do not use subversive mechanisms such as peeking into a database to verify results or rote comparisons against hard-coded JSON blobs. Even when run wholly within the same process, SCTs can loop back to localhost against an embedded HTTP server, such as the one available in Spring Boot. By limiting access to APIs in this manner, SCTs are agnostic as to whether the mechanisms behind those APIs run in the same process or in different processes, while all aspects of serialization and deserialization are exercised even in the simplest test configuration.

The general structure of an SCT is:

- Establish a starting state (preferring to keep tests self-contained)

- One or more service calls (e.g., testing stateful transitions of updates followed by reads)

- Deep verification of the structural consistency and expected behavior of the results from each call and across multiple calls
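To make that structure concrete, here is a minimal sketch in Java, assuming Java 17 and only JDK classes. The JDK's built-in `com.sun.net.httpserver.HttpServer` stands in for an embedded server such as Spring Boot's, and the greeting resource, `GreetingServer`, and `GreetingContractTest` are hypothetical names chosen for illustration. The test follows the three steps above and reaches the service only through HTTP on the loopback interface.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical service under test: PUT /greetings/{id} stores the body, GET returns it (404 if absent).
final class GreetingServer {
    private final Map<String, String> store = new ConcurrentHashMap<>();
    private final HttpServer server;

    GreetingServer() {
        try {
            // Port 0 lets the OS pick any free port on the loopback interface.
            server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        server.createContext("/greetings/", exchange -> {
            String id = exchange.getRequestURI().getPath().substring("/greetings/".length());
            String method = exchange.getRequestMethod();
            int status = 405;
            byte[] body = new byte[0];
            if (method.equals("PUT")) {
                store.put(id, new String(exchange.getRequestBody().readAllBytes(), StandardCharsets.UTF_8));
                status = 204;
            } else if (method.equals("GET")) {
                String value = store.get(id);
                status = value == null ? 404 : 200;
                if (value != null) {
                    body = value.getBytes(StandardCharsets.UTF_8);
                }
            }
            // A length of -1 tells HttpServer that there is no response body.
            exchange.sendResponseHeaders(status, body.length == 0 ? -1 : body.length);
            if (body.length > 0) {
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            }
            exchange.close();
        });
        server.start();
    }

    URI baseUri() {
        return URI.create("http://127.0.0.1:" + server.getAddress().getPort());
    }

    void stop() {
        server.stop(0);
    }
}

final class GreetingContractTest {
    private final HttpClient client = HttpClient.newBuilder().version(HttpClient.Version.HTTP_1_1).build();

    // Knows only a base URI, so it is agnostic about where the service actually runs.
    void putThenGetRoundTrips(URI base) {
        // 1. Starting state: a unique id keeps the test self-contained.
        URI resource = base.resolve("/greetings/" + UUID.randomUUID());
        check(send(HttpRequest.newBuilder(resource).GET().build()).statusCode() == 404, "absent before PUT");

        // 2. Service calls: an update followed by a read.
        HttpResponse<String> put = send(HttpRequest.newBuilder(resource)
                .PUT(HttpRequest.BodyPublishers.ofString("hello")).build());
        HttpResponse<String> get = send(HttpRequest.newBuilder(resource).GET().build());

        // 3. Verification of each call and of the relationship between them.
        check(put.statusCode() == 204, "PUT returns 204");
        check(get.statusCode() == 200, "GET returns 200");
        check("hello".equals(get.body()), "GET returns what PUT stored");
    }

    private HttpResponse<String> send(HttpRequest request) {
        try {
            return client.send(request, HttpResponse.BodyHandlers.ofString());
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    private static void check(boolean condition, String description) {
        if (!condition) {
            throw new AssertionError(description);
        }
    }
}
```

Because the test receives nothing but a base URI, the same method could be pointed at a server running in another process without modification.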

Because of the level at which they operate, SCTs may appear to be more like traditional integration tests (inter-process, involving coordination across external dependencies) than unit tests (intra-process, operating wholly within a single process space), but there are important differences. Traditional integration test codebases might be separated physically (separate repositories), by ownership (different teams), by implementation (different languages and frameworks), by granularity (service vs. method focus), and by level of abstraction. These separations can lead to costly communication overhead, and the lack of visibility between such codebases can lead to redundancies, gaps, or difficulty tracking how those separately versioned artifacts relate to each other.

With the approach described here, SCTs can operate at both levels: inter-process, for integration-test-level comprehensiveness, and intra-process, as part of the fast edit-compile-test cycle during development. By implication, SCTs operating at both levels:

- Co-exist in the development codebase, which ensures that committed code and tests are always in lockstep

- Are defined using a uniform language and framework(s), which lowers the barriers to shared understanding and reduces communication overhead

- Reduce redundancy by enabling each test to serve multiple purposes

- Enable testers and developers to leverage each other’s work or even (depending on your process) remove the need for the dev/tester role distinction to exist in the first place

Faking Real Challenges

The distinguishing challenge of testing at the service level is scope. A single service invocation can wind through many code paths across many classes and include interactions with external services and databases. While mocks are often used in unit tests to isolate the unit under test from its collaborators, they have downsides that become more pronounced when testing services. The collaborators at the service testing level are the external services and databases, which, while fewer in number than internal collaboration points, are often more complex. Mocks do not possess the attributes of good programming abstractions that drive modern language design; there is no abstraction, no encapsulation, and no cohesiveness. They simply exist in the context of a test as an assemblage of specific replies to specific method invocations. When testing services, those external collaboration points also tend to be called repeatedly across different tests. Because mocks require a precise understanding and replication of collaborator requests and responses that are not even in your control, it is cumbersome to replicate and manage that malleable know-how across all your tests.

A more suitable service-level alternative to mocks is the fake, another form of test double. A fake object provides a working, stateful implementation of its interface, with implementation shortcuts that make it unsuitable for production. A fake, for example, may lack actual persistence while otherwise providing a fully (or mostly, as deemed necessary for testing purposes) functionally consistent representation of its 'real' counterpart. While mocks are told how to respond (when you see exactly this, do exactly that), fakes themselves know how to behave (according to their interface contract). Since we can use the full range of available programming constructs, such as classes, when building fakes, it is more natural to share them across tests: they encapsulate the complexities of external integration points, which then need not be copied and pasted throughout your tests. While the unconstrained versatility of mocks does at times have its advantages, the inherent coherence and shareability of fakes make them appealing as the primary implementation vehicle for the complexity behind SCTs.
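The difference between being told how to respond and knowing how to behave can be sketched as follows, assuming a hypothetical `InventoryService` contract. `CannedInventory` is a hand-written stand-in for what a mocking library would configure (fixed replies to specific calls), while `FakeInventory` is a stateful fake that honors the contract but keeps everything in memory.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical contract for an external collaborator, such as a remote inventory service.
interface InventoryService {
    void restock(String sku, int quantity);

    // Reserves stock; returns false and changes nothing if too little is available.
    boolean reserve(String sku, int quantity);

    int available(String sku);
}

// Mock-style stand-in: fixed replies to specific calls, with no state and no contract.
final class CannedInventory implements InventoryService {
    @Override public void restock(String sku, int quantity) { }
    @Override public boolean reserve(String sku, int quantity) { return sku.equals("SKU-1") && quantity == 2; }
    @Override public int available(String sku) { return 5; }
}

// Fake: a working, stateful implementation of the contract. Keeping state only in memory
// (no persistence) is the shortcut that makes it unsuitable for production.
final class FakeInventory implements InventoryService {
    private final Map<String, Integer> stock = new HashMap<>();

    @Override
    public synchronized void restock(String sku, int quantity) {
        requirePositive(quantity);
        stock.merge(sku, quantity, Integer::sum);
    }

    @Override
    public synchronized boolean reserve(String sku, int quantity) {
        requirePositive(quantity);
        int current = stock.getOrDefault(sku, 0);
        if (current < quantity) {
            return false;
        }
        stock.put(sku, current - quantity);
        return true;
    }

    @Override
    public synchronized int available(String sku) {
        return stock.getOrDefault(sku, 0);
    }

    private static void requirePositive(int quantity) {
        if (quantity <= 0) {
            throw new IllegalArgumentException("quantity must be positive: " + quantity);
        }
    }
}
```

Any test that needs inventory can share `FakeInventory`; with the canned version, each test would have to re-specify its replies and keep them mutually consistent by hand.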

Alternately Configured Tests (ACTs)

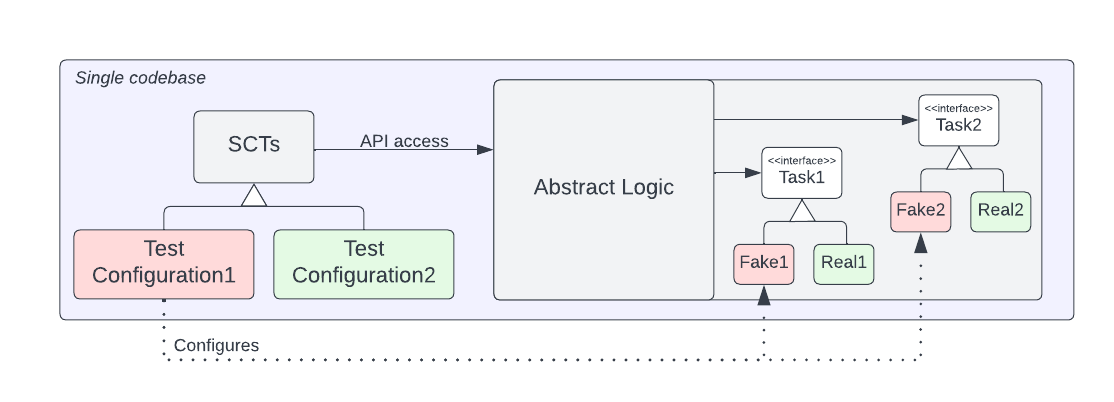

Because they are restricted to an appropriately high level of API abstraction, SCTs can be agnostic about whether fake or real integrations are running underneath, so the same set of service contract tests can be run with either set. If the integrated entities, here referred to as task objects (because they can often be run in parallel, as illustrated here), are written without assuming particular implementations of other task objects (in accordance with the "L" and "D" principles in SOLID), then different combinations of task implementations can be applied for any purpose. One configuration might use all fakes, another a mix of fakes and real implementations, and another all real implementations.
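A minimal sketch of interchangeable task implementations, assuming hypothetical `PricingTask` and `TaxTask` interfaces: `QuoteService` depends only on the interfaces, each `TestConfiguration` picks one implementation per task, and the contract test is written once. `BasisPointTax` is only a placeholder for a real or semi-real task; a genuine adapter would call an external service instead.

```java
import java.util.List;
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical task interfaces. QuoteService depends only on these abstractions,
// so any implementation of either task can be plugged in.
interface PricingTask {
    long priceCents(String sku);
}

interface TaxTask {
    long taxCents(long netCents);
}

final class QuoteService {
    private final PricingTask pricing;
    private final TaxTask tax;

    QuoteService(PricingTask pricing, TaxTask tax) {
        this.pricing = pricing;
        this.tax = tax;
    }

    long quoteCents(String sku, int quantity) {
        long net = pricing.priceCents(sku) * quantity;
        return net + tax.taxCents(net);
    }
}

// Fakes: in-memory and deterministic.
final class FakePricing implements PricingTask {
    private final Map<String, Long> prices = Map.of("SKU-1", 250L);

    @Override
    public long priceCents(String sku) {
        Long price = prices.get(sku);
        if (price == null) {
            throw new IllegalArgumentException("unknown SKU: " + sku);
        }
        return price;
    }
}

final class FakeTax implements TaxTask {
    @Override
    public long taxCents(long netCents) {
        return netCents / 10; // flat 10%
    }
}

// Placeholder for a real or semi-real tax task (hypothetical).
final class BasisPointTax implements TaxTask {
    private static final long RATE_BASIS_POINTS = 1_000; // 10%

    @Override
    public long taxCents(long netCents) {
        return Math.round(netCents * RATE_BASIS_POINTS / 10_000.0);
    }
}

// A named test configuration: one implementation choice per task.
record TestConfiguration(String name, Supplier<PricingTask> pricing, Supplier<TaxTask> tax) {
    QuoteService newService() {
        return new QuoteService(pricing.get(), tax.get());
    }
}

final class QuoteContractTests {
    // Written once; it cannot tell which implementations sit underneath.
    static void quoteIncludesTax(QuoteService service) {
        long quote = service.quoteCents("SKU-1", 2);
        if (quote != 550) {
            throw new AssertionError("expected 550 but was " + quote);
        }
    }

    static List<TestConfiguration> configurations() {
        return List.of(
                new TestConfiguration("all-fakes", FakePricing::new, FakeTax::new),
                new TestConfiguration("mixed", FakePricing::new, BasisPointTax::new));
    }
}
```

The expected value of 550 assumes that every configuration sees the same test data (a price of 250 cents and a 10% tax rate).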

These Alternately Configured Tests (ACTs) suggest a process that starts with all fakes and moves to all real, possibly with intermediate points of mixing and matching. TDD begins in a walled-off garden with the 'all fakes' configuration, which has no dependence on external data configurations and runs fast because it operates in process. Once all SCTs pass in that test configuration, subsequent configurations are run, each further verifying functionality while requiring attention only to the elements that changed relative to the previous working test configuration. The last step is to configure as many “real” task implementations as required to match the intended level of integration testing.
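The progression from all fakes toward all real can be driven by a small runner. In this sketch (all names hypothetical), configurations are ordered from simplest to most complex, each test gets a freshly built service, and the runner stops at the first configuration that does not pass every test, so attention goes to whatever changed relative to the last passing configuration.

```java
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Supplier;

// A named configuration: builds a service wired with that configuration's task implementations.
record Configuration<S>(String name, Supplier<S> newService) {}

// A contract test written once against the service API.
record ContractTest<S>(String name, Consumer<S> body) {}

final class ProgressiveRunner {
    // Runs all tests per configuration, in order from simplest to most complex, and stops at
    // the first configuration with a failure. Returns the last fully passing configuration
    // (null if none passed) and appends one PASS/FAIL line per test execution to the log.
    static <S> String run(List<Configuration<S>> ordered, List<ContractTest<S>> tests, List<String> log) {
        String lastPassing = null;
        for (Configuration<S> config : ordered) {
            boolean allPassed = true;
            for (ContractTest<S> test : tests) {
                try {
                    // A fresh service per test keeps tests independent of one another.
                    test.body().accept(config.newService().get());
                    log.add("PASS " + config.name() + " / " + test.name());
                } catch (AssertionError | RuntimeException e) {
                    allPassed = false;
                    log.add("FAIL " + config.name() + " / " + test.name() + ": " + e.getMessage());
                }
            }
            if (!allPassed) {
                // Fix this configuration before moving on to more complex ones.
                break;
            }
            lastPassing = config.name();
        }
        return lastPassing;
    }
}
```

In a real project, the same ordering rule is more likely to come from a test framework's parameterized tests or from separate build profiles; the runner only illustrates the idea.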

ACTs exist when there are at least two test configurations (color-coded red and green in the diagram above). This is often all that is needed, but at times it can be useful to add intermediate configurations that provide a more incremental sequence from the simplest to the most complex configuration. Such intermediate test configurations might mix fake and real or semi-real task implementations, for example ones that hit in-memory or containerized implementations of external integration points.

Balancing SCTs and Unit Testing

Relying on unit tests alone for test coverage of classes with multiple collaborators can be difficult because you are operating several levels removed from the end result. Coverage tools tell you where there are untried code paths, but are those code paths important, are they largely inconsequential, and are they ever even exercised in practice? High test coverage does not necessarily equal confidence-engendering test coverage, which is the real goal.

SCTs, in contrast, are by definition always relevant to and important for the purpose of writing services. Unit tests focus on the correctness of classes, while SCTs focus on the correctness of your API.

This focus necessarily drives deep thinking about the semantics of your API, which in turn can drive deep thinking about the purpose of your class structure and how the individual parts contribute to the overall result. This has a big impact on the ability to evolve and change: tests against implementation artifacts must be changed when the implementation changes, while tests against services must change when there is a functional service-level change. While there are change scenarios that favor either case, refactoring freedom is often regarded as paramount from an agile perspective. Tests encourage refactoring when you have confidence that they will catch errors introduced by refactoring, but tests can also discourage refactoring to the extent that refactoring results in excessive test rework. Testing at the highest possible level of abstraction makes tests more stable while refactoring.

Because they are written at the appropriate level of abstraction, SCTs are also accessible to a wider community (quality engineers, API consumers). The best way to understand a system is often through its tests; since SCTs are expressed in the same API used by its consumers, those consumers can not only read them but possibly also contribute to them in the spirit of Consumer-Driven Contracts. Unit tests, on the other hand, are accessible only to those with deep familiarity with the implementation.

Despite these differences, it is not a question of SCTs vs. unit tests, one excluding the other. They each have their purpose; there is a balance between them. SCTs, even in a test configuration with all fakes, can often achieve most of the required code coverage, while unit testing can fill in the gaps. SCTs also do not preclude the benefits of unit testing with TDD for classes with minimal collaborators and well-defined contracts. SCTs can significantly reduce the volume of unit tests against classes without those characteristics. The combination is synergistic.

SCT Data Setup

To fulfill its purpose, every test must work against a known state. This can be a more challenging problem for service tests than for unit tests, since the external integration points are outside of the codebase. Traditional integration tests sometimes handle data setup through an out-of-band process, such as seeding a database with automated or manual scripts. This makes tests difficult to understand without hunting down that external state or those external processes, and it leaves them liable to break at any time through circumstances outside your control. If updates are involved, care must be taken to reset or restore the state at the start or end of the test. If multiple users happen to run the tests at the same time, care must also be taken to avoid update conflicts.

A better approach is for tests to independently set up (and possibly tear down) their own target state, one that does not conflict with other users. For example, an SCT that tests the filtered retrieval of orders would first create an order with a unique ID, and with field values set to the test's expectations, before attempting to filter on it. Self-contained tests avoid the pitfalls of shared, separately controlled state and are much easier to read as well.
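A sketch of such a self-contained test, assuming a hypothetical `OrderApi` (backed here by an in-memory fake so the example runs on its own): the test derives unique identifiers from a random UUID, creates exactly the orders it needs, filters on them, and cleans up afterwards.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

record Order(String id, String customer, String status) {}

// Hypothetical order API as seen by the test; in a real SCT these would be service calls.
interface OrderApi {
    void create(Order order);
    List<Order> findByCustomerAndStatus(String customer, String status);
    void delete(String id);
}

// In-memory fake so that the example runs on its own.
final class InMemoryOrderApi implements OrderApi {
    private final Map<String, Order> orders = new ConcurrentHashMap<>();

    @Override
    public void create(Order order) {
        if (orders.putIfAbsent(order.id(), order) != null) {
            throw new IllegalStateException("duplicate order id: " + order.id());
        }
    }

    @Override
    public List<Order> findByCustomerAndStatus(String customer, String status) {
        List<Order> matches = new ArrayList<>();
        for (Order order : orders.values()) {
            if (order.customer().equals(customer) && order.status().equals(status)) {
                matches.add(order);
            }
        }
        return matches;
    }

    @Override
    public void delete(String id) {
        orders.remove(id);
    }

    int size() {
        return orders.size();
    }
}

final class OrderFilterContractTest {
    static void filterReturnsOnlyMatchingOrders(OrderApi api) {
        // Setup: values unique to this run, so other data and concurrent runs cannot interfere.
        String runId = UUID.randomUUID().toString();
        String customer = "customer-" + runId;
        Order open = new Order("order-" + runId + "-open", customer, "OPEN");
        Order shipped = new Order("order-" + runId + "-shipped", customer, "SHIPPED");
        api.create(open);
        api.create(shipped);
        try {
            // Exercise the filter and verify that only the matching order comes back.
            List<Order> result = api.findByCustomerAndStatus(customer, "OPEN");
            if (!result.equals(List.of(open))) {
                throw new AssertionError("expected only " + open + " but got " + result);
            }
        } finally {
            // Optional teardown, so repeated runs leave no residue.
            api.delete(open.id());
            api.delete(shipped.id());
        }
    }
}
```

Because the customer value is unique per run, pre-existing data and concurrent runs by other users cannot change the result.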

Of course, direct data setup is not always possible, since a given external service might not provide the mutator operations needed for your test setup. There are several ways to handle this:

- Add testing-only mutator operations. These might even go to a completely different service that isn't otherwise required for production execution.

- Provide a mixed fake/real test configuration using fakes for the update-constrained external service(s), then employ a mechanism to skip such tests for test configurations where those fake tasks are not active. This at least tests the real versions of other tasks.

- Externally pre-populated data can still be used with SCTs, and those tests can still be run with fakes, provided the fakes expose equivalent results. For tests whose purpose is not actually to validate updates (i.e., updates are needed only for test setup), this at least avoids conflicts among multiple simultaneous test executions.
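One way to combine the first two options is sketched below, with hypothetical names throughout: test-only mutation is modeled as an optional `SeedableCatalog` capability that the fake implements but the read-only stand-in for the real adapter does not, and a test that depends on it reports itself as skipped rather than failing. With JUnit 5, `Assumptions.assumeTrue` would typically play the skipping role.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical read-only external service: production offers no way to create products.
interface ProductCatalog {
    Optional<String> nameOf(String productId);
}

// Hypothetical test-only mutator capability, offered only where it can be supported.
interface SeedableCatalog extends ProductCatalog {
    void seed(String productId, String name);
}

final class FakeCatalog implements SeedableCatalog {
    private final Map<String, String> names = new HashMap<>();

    @Override
    public Optional<String> nameOf(String productId) {
        return Optional.ofNullable(names.get(productId));
    }

    @Override
    public void seed(String productId, String name) {
        names.put(productId, name);
    }
}

// Stand-in for the real adapter: read-only, so it cannot support seeding.
final class ReadOnlyCatalog implements ProductCatalog {
    @Override
    public Optional<String> nameOf(String productId) {
        return Optional.empty();
    }
}

enum Outcome { PASSED, SKIPPED }

final class CatalogContractTests {
    static Outcome lookupReturnsSeededName(ProductCatalog catalog) {
        // Skip rather than fail when this configuration cannot establish the needed state.
        if (!(catalog instanceof SeedableCatalog seedable)) {
            return Outcome.SKIPPED;
        }
        seedable.seed("p-42", "Widget");
        if (!seedable.nameOf("p-42").equals(Optional.of("Widget"))) {
            throw new AssertionError("seeded product name not returned");
        }
        return Outcome.PASSED;
    }
}
```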

Providing Early Working Services

A test-filtering mechanism can be employed to run tests only against selected test configurations. For example, a given SCT may initially work against fakes but not against other test configurations. That restricted SCT can be checked into your code repository even though it does not yet work across all test configurations. This encourages smaller commits and can be useful for handing off work between team members, who would then make that test work under more complex configurations. Done right, the follow-on work need only focus on implementing the real task without breaking the already-working SCTs.
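A minimal sketch of such a filter, using a hypothetical `@OnlyIn` annotation read through reflection: unannotated tests are selected for every configuration, while an annotated test is selected only for the configurations it names. Test frameworks offer comparable mechanisms (for example, JUnit 5's `@Tag` combined with tag filtering in the build), so this is illustrative rather than a recommendation.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical marker restricting a test to the named configurations.
// Tests without it are selected for every configuration.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface OnlyIn {
    String[] value();
}

final class ShippingContractTests {
    static void quoteShipping() {
        // Already passes in every configuration.
    }

    @OnlyIn("all-fakes")
    static void trackShipment() {
        // Checked in early: so far only the fake carrier task supports tracking.
    }
}

final class FilteringRunner {
    // Returns, in name order, the static no-arg test methods selected for a configuration.
    static List<String> selectedTests(Class<?> testClass, String configuration) {
        List<String> selected = new ArrayList<>();
        for (Method method : testClass.getDeclaredMethods()) {
            boolean isTest = Modifier.isStatic(method.getModifiers())
                    && method.getParameterCount() == 0
                    && !method.isSynthetic();
            if (!isTest) {
                continue;
            }
            OnlyIn restriction = method.getAnnotation(OnlyIn.class);
            if (restriction == null || Arrays.asList(restriction.value()).contains(configuration)) {
                selected.add(method.getName());
            }
        }
        selected.sort(null); // getDeclaredMethods() returns methods in no particular order
        return selected;
    }
}
```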

This benefit can be extended to API consumers. Fakes can serve to provide early, functionally rich implementations of services without those consumers having to wait for a complete solution. Real-task implementations can be incrementally introduced with little or no consumer code changes.

Running Remote

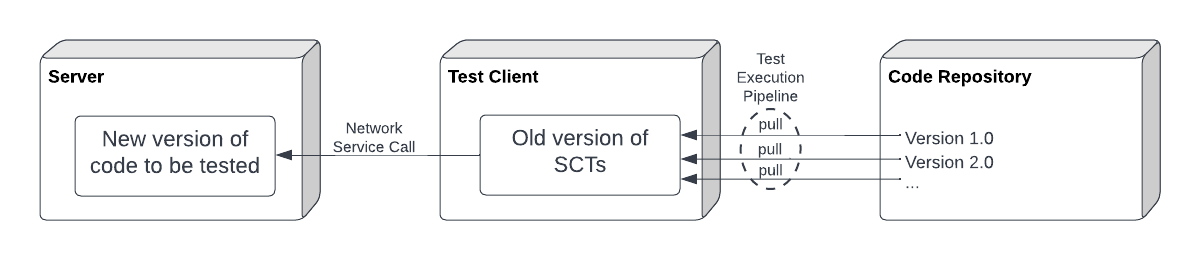

Because SCTs are embedded in the same executable space as the service code under test, both can run in the same process. This is beneficial for the initial design phases, including TDD, and running on a single machine keeps execution simple even at the integration test level. Beyond that, it can sometimes be useful to run the tests and the service on different machines, for example to bring up a test client against a fully integrated running system in staging or production, perhaps also for load/stress testing.
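Switching between in-process and remote execution can come down to how the test client resolves its target. In this sketch, `TargetResolver` and the `sct.baseUrl` system property are hypothetical: an explicitly configured base URL (such as a staging deployment) wins, and otherwise the service is started in process and its loopback address is used.

```java
import java.net.URI;
import java.util.Optional;
import java.util.function.Supplier;

final class TargetResolver {
    // Hypothetical property name; any configuration mechanism would do.
    static final String BASE_URL_PROPERTY = "sct.baseUrl";

    // An explicitly configured base URL wins; otherwise the in-process service is started
    // (lazily, only when needed) and its loopback address is returned.
    static URI resolve(Optional<String> configuredBaseUrl, Supplier<URI> startInProcess) {
        return configuredBaseUrl
                .filter(url -> !url.isBlank())
                .map(URI::create)
                .orElseGet(startInProcess);
    }

    static URI fromSystemProperties(Supplier<URI> startInProcess) {
        return resolve(Optional.ofNullable(System.getProperty(BASE_URL_PROPERTY)), startInProcess);
    }
}
```

A remote run would then need only something like `java -Dsct.baseUrl=https://staging.example.com ...`, with the SCTs themselves unchanged.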

An additional use case is testing backward compatibility. A test client with a previous version of the SCTs can be brought up separately from, and run against, the newer version of the server to verify that the older tests still pass. Within an automated build/test pipeline, several versions can be managed this way.

Summary

Service Contract Tests (SCTs) are tests against services. Alternately Configured Tests (ACTs) define multiple test configurations, each providing a different set of task implementations. A single set of SCTs can be run against any test configuration. Even though SCTs can be run with a test configuration that is entirely in process, the flexibility offered by ACTs distinguishes them from traditional unit/component tests. SCTs and unit tests complement one another.

With this approach, test-driven development (TDD) can be applied to service development. This begins by creating SCTs against the simplest possible in-process test configuration, which is usually also the fastest to run. Once those tests pass, they can be run against more complex configurations and ultimately against a test configuration of fully 'real' task implementations to achieve the traditional goals of integration or end-to-end testing. Leveraging the same set of SCTs across all configurations supports an incremental development process and yields significant economies of scale.

Opinions expressed by DZone contributors are their own.
