Fueling Innovation: Key Tools for Enhancing Generative AI in Data Lake Houses

With the advent of LLMs and Generative AI apps, data has become the central part of the entire ecosystem. In this article, we discuss the tools needed to support AI apps on top of Data Lake Houses.

"The coming wave of generative AI will be more revolutionary than any technology innovation that's come before in our lifetime, or maybe any lifetime." - Marc Benioff, CEO of Salesforce

In today's data-driven landscape, organizations are constantly seeking innovative ways to derive value from their vast and ever-expanding datasets. Data Lakes have emerged as a cornerstone of modern data architecture, providing a scalable and flexible foundation for storing and managing diverse data types. Simultaneously, Generative Artificial Intelligence (AI) has been making waves, enabling machines to mimic human creativity and generate content autonomously.

The convergence of Data Lake Houses and Generative AI opens up exciting possibilities for businesses and developers alike. It empowers them to harness the full potential of their data resources by creating AI-driven applications that generate content, insights, and solutions dynamically. However, navigating this dynamic landscape requires the right set of tools and strategies.

In this blog, we'll explore the essential tools and techniques that empower developers and data scientists to leverage the synergy between these two transformative technologies.

Below are the basic capabilities and tools needed on top of your data lake to support Generative AI apps:

Vector Database

Grounding Large Language Models (LLMs) using vector search is an approach aimed at mitigating one of the most significant challenges in AI-driven content generation: hallucinations. LLMs, such as GPT, are remarkable for their ability to generate human-like text, but they can occasionally produce information that is factually incorrect or misleading. This issue, known as hallucination, arises because LLMs generate content based on patterns and associations learned from vast text corpora, sometimes without a factual basis.

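Vector search, a technique rooted in machine learning and information retrieval, plays a pivotal role in grounding LLMs. Documents from the data lake are converted into embeddings and stored in a vector database. At query time, the passages closest to the user's question are retrieved and supplied to the model as context, so that generated content is aligned with reliable sources and real-world knowledge.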
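To make the retrieval step concrete, here is a minimal sketch of vector search used for grounding. It is an illustration under stated assumptions, not a production design: `embed` is a toy bag-of-words stand-in for a real embedding model, the in-memory `DOCS` list stands in for a vector database, and all names are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, docs: list, k: int = 2) -> list:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def grounded_prompt(question: str, docs: list, k: int = 2) -> str:
    """Build a prompt that asks the model to answer from retrieved context only."""
    context = "\n".join(f"- {d}" for d in top_k(question, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical documents that would normally live in the lake house.
DOCS = [
    "The warehouse table orders is partitioned by order date.",
    "Refunds are processed within five business days.",
    "The sales dashboard refreshes every hour.",
]
```

Calling `grounded_prompt("how long do refunds take", DOCS, k=1)` would place the refund policy sentence in the prompt, giving the model a factual source to draw on instead of relying on what it memorized during training.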

AutoML

AutoML helps you automatically apply machine learning to a dataset. You provide the dataset and identify the prediction target while AutoML prepares the dataset for model training. AutoML then performs and records a set of trials that create, tune, and evaluate multiple models.

You can further streamline this work by integrating AutoML platforms such as Google AutoML or Azure AutoML, which automate the training and tuning of AI models and reduce the need for extensive manual configuration.
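The trial loop described above can be sketched in a few lines. This is a simplified illustration, not how any particular AutoML platform works: the three candidate models, the `run_trials` function, and the use of validation mean squared error as the ranking metric are all assumptions made for the example.

```python
from statistics import mean


def fit_mean(xs, ys):
    """Baseline model: always predict the average target."""
    m = mean(ys)
    return lambda x: m


def fit_linear(xs, ys):
    """One-variable least-squares line."""
    mx, my = mean(xs), mean(ys)
    var = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / var if var else 0.0
    intercept = my - slope * mx
    return lambda x: slope * x + intercept


def fit_nearest(xs, ys):
    """1-nearest-neighbour: predict the target of the closest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]


# Hypothetical search space; a real AutoML system explores far more.
CANDIDATES = {"mean": fit_mean, "linear": fit_linear, "nearest": fit_nearest}


def mse(model, xs, ys):
    """Mean squared error of a model on the given points."""
    return mean([(model(x) - y) ** 2 for x, y in zip(xs, ys)])


def run_trials(train, valid):
    """Train every candidate, record its validation error, best trial first."""
    tx, ty = zip(*train)
    vx, vy = zip(*valid)
    trials = []
    for name, fit in CANDIDATES.items():
        model = fit(tx, ty)
        trials.append({"model": name, "valid_mse": mse(model, vx, vy)})
    return sorted(trials, key=lambda t: t["valid_mse"])
```

For example, `run_trials([(0, 1), (1, 3), (2, 5), (3, 7)], [(4, 9), (5, 11)])` returns the recorded trials ranked by validation error, so the caller can pick the first entry as the winning model.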

Model Serving

Model serving is the process of making a trained model available to users so that they can make predictions on new data. In the context of generative AI apps on data lake houses, model serving plays a critical role in enabling applications to generate text, translate between languages, and answer user questions in an informative way.

Here are some of the key benefits of using model serving in generative AI apps on data lake houses:

- Scalability: Model serving systems can be scaled out to handle large and fluctuating volumes of traffic. This is important for generative AI apps, which can be very popular and generate a lot of traffic.

- Reliability: Model serving systems are designed to be highly reliable. This is important for generative AI apps, which need to be available to users 24/7.

- Security: Model serving systems can be configured to be very secure. This is important for generative AI apps, which may be processing sensitive data.

At the same time, the costs of in-house model serving can be prohibitive for smaller companies. This is why many smaller companies choose to outsource their model serving needs to a third-party provider.
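As a rough picture of what a serving endpoint does, the sketch below wraps a model behind an HTTP route using only the Python standard library. Everything here is an assumption for illustration: `generate` is a placeholder for a real model's inference call, and the `/predict` path, the `prompt` field, and the port are hypothetical choices.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def generate(prompt: str) -> str:
    """Hypothetical stand-in for a trained model's inference call."""
    return f"echo: {prompt}"


def handle_predict(raw_body: bytes):
    """Validate the request body and return (HTTP status, JSON-able response)."""
    try:
        prompt = json.loads(raw_body)["prompt"]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": "expected a JSON object with a 'prompt' field"}
    if not isinstance(prompt, str) or not prompt.strip():
        return 400, {"error": "'prompt' must be a non-empty string"}
    return 200, {"completion": generate(prompt)}


class PredictHandler(BaseHTTPRequestHandler):
    """Exposes the model on POST /predict (path name is an assumption)."""

    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_predict(self.rfile.read(length))
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8080) -> None:
    """Start a single-process server; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

Keeping the request logic in `handle_predict`, separate from the HTTP handler, makes it easy to test without opening a socket. A single-process server like this does not provide the scalability, reliability, or security discussed above; those come from the serving platform placed around the model.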

LLM Gateway

LLM Gateway is a system that makes it easier for people to use different large language models (LLMs) from different providers. It does this by providing a single interface for interacting with all of the different LLMs and by encapsulating best practices for using them. It also manages data by tracking what data is sent to and received from the LLMs and by running PII scrubbing heuristics on the data before it is sent.

In other words, LLM Gateway is a one-stop shop for using LLMs. It makes it easy to get started with LLMs, and it helps people to use them safely and efficiently.

LLM gateways serve the following purposes:

- Simplify the process of integrating these powerful language models into various applications.

- Provide user-friendly APIs and SDKs, reducing the barrier to entry for leveraging LLMs.

- Enable prediction caching, so that repeated prompts can be answered from the cache instead of calling the model again.

- Apply rate limiting to manage costs.

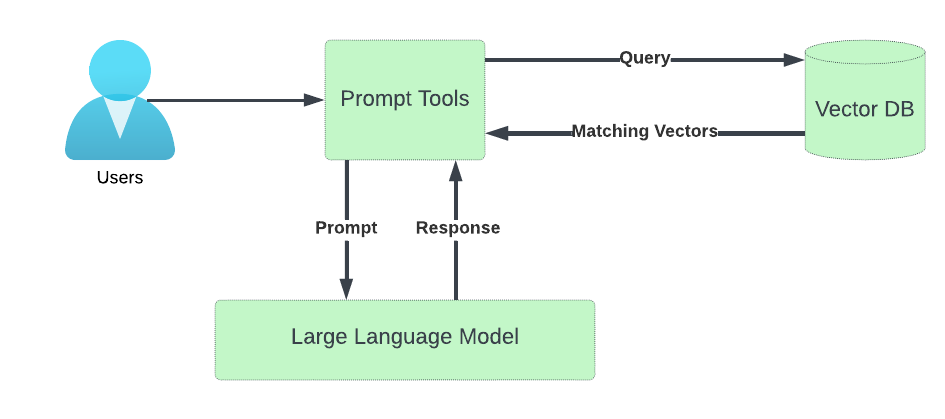
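The gateway responsibilities described above (a single interface, PII scrubbing, request tracking, caching, and rate limiting) can be sketched as one small class. This is a simplified illustration under assumptions: the `LLMGateway` class and its method names are hypothetical, providers are modeled as plain functions from prompt to response, and the email regex is only one example of a scrubbing heuristic.

```python
import re
import time
from typing import Callable, Dict

# Deliberately simple heuristic; real PII scrubbing covers many more patterns.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def scrub_pii(text: str) -> str:
    """Mask email addresses before the prompt leaves the gateway."""
    return EMAIL.sub("[EMAIL]", text)


class LLMGateway:
    """One entry point in front of several LLM providers (hypothetical design)."""

    def __init__(self, providers: Dict[str, Callable[[str], str]],
                 max_calls_per_minute: int = 60,
                 clock: Callable[[], float] = time.monotonic):
        self.providers = providers
        self.max_calls = max_calls_per_minute
        self.clock = clock
        self.cache = {}       # (provider, scrubbed prompt) -> response
        self.call_times = []  # timestamps of recent provider calls
        self.log = []         # what was sent to and received from providers

    def complete(self, provider: str, prompt: str) -> str:
        clean = scrub_pii(prompt)
        key = (provider, clean)
        if key in self.cache:  # repeated prompt: no provider call, no cost
            return self.cache[key]
        now = self.clock()
        self.call_times = [t for t in self.call_times if now - t < 60]
        if len(self.call_times) >= self.max_calls:
            raise RuntimeError("rate limit exceeded")
        self.call_times.append(now)
        response = self.providers[provider](clean)
        self.cache[key] = response
        self.log.append({"provider": provider, "prompt": clean, "response": response})
        return response
```

An application would register each provider's client function once, for example `LLMGateway({"provider_a": call_a, "provider_b": call_b})`, and then call `complete` everywhere else. Because the cache key uses the scrubbed prompt, cached entries never contain the original email addresses.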

Prompt Tools

Prompt tools can help you write better prompts for generative AI tools, which can lead to improved responses in a number of ways:

- Reduced ambiguity: Prompt tools can help you to communicate your requests more clearly and precisely, which can help to reduce ambiguity in the AI's responses.

- Consistent tone and style: Prompt tools can help you to specify the tone and style of the desired output, ensuring that generated content is consistent and on-brand.

- Mitigated bias: Prompt tools can help you to instruct the AI to avoid sensitive topics or adhere to ethical guidelines, which can help to mitigate bias and promote fairness.

- Improved relevance: Prompt tools can help you to set the context and goals for the AI, ensuring that generated content stays on-topic and relevant.

Here are some specific examples of how prompt tools can be used to address the challenges listed above:

- Avoiding ambiguous or unintended responses: Instead of simply saying, "Write me a blog post about artificial intelligence," you could use a prompt tool to generate a more specific prompt, such as "Write a 1000-word blog post about the different types of artificial intelligence and their potential applications."

- Ensuring consistent tones and styles: If you are writing an email to clients, you can use a prompt tool to specify a formal and informative tone. If you are writing a creative piece, you can use a prompt tool to specify a more playful or experimental tone.

- Producing unbiased and politically correct content: If you are writing about a sensitive topic, such as race or religion, you can use a prompt tool to instruct the AI to avoid certain subjects or viewpoints. You can also use a prompt tool to remind the AI to adhere to your organization's ethical guidelines.

- Staying on-topic and generating relevant information: If you are asking the AI to generate a report on a specific topic, you can use a prompt tool to provide the AI with the necessary context and goals. This will help the AI to stay on-topic and generate relevant information.

Overall, prompt tools are valuable for anyone who works with generative AI. They help you write better prompts and get more useful, consistent output from your models.
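One simple form a prompt tool can take is a structured template that forces the author to state the task, context, tone, length, and guidelines explicitly. The sketch below is a hypothetical example of that idea; the `PromptSpec` fields and the output layout are assumptions, not the format of any specific tool.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PromptSpec:
    """Explicit description of what the model is being asked to produce."""
    task: str
    context: str = ""
    tone: str = "neutral"
    max_words: Optional[int] = None
    guidelines: List[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Render the spec as a prompt with one labeled line per requirement."""
    lines = [f"Task: {spec.task}"]
    if spec.context:
        lines.append(f"Context: {spec.context}")
    lines.append(f"Tone: {spec.tone}")
    if spec.max_words:
        lines.append(f"Length: about {spec.max_words} words")
    for rule in spec.guidelines:
        lines.append(f"Guideline: {rule}")
    return "\n".join(lines)


blog_prompt = build_prompt(PromptSpec(
    task="Write a blog post about the different types of artificial "
         "intelligence and their potential applications.",
    tone="formal and informative",
    max_words=1000,
    guidelines=["Follow the organization's ethical guidelines."],
))
```

Because every prompt is built from the same fields, tone and guidelines stay consistent across a team, and a vague request such as "write me a blog post about AI" has to be made specific before it can be rendered.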

Monitoring

Generative AI models have transformed various industries by enabling machines to generate human-like text, images, and more. When integrated with Lake Houses, these models become even more powerful, leveraging vast amounts of data to generate creative content. However, monitoring such models is crucial to ensure their performance, reliability, and ethical use. Here are some monitoring tools and practices tailored for Generative AI on top of Lake Houses:

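- Model Performance Metrics: Track latency, throughput, and the quality of generated output for each model.

- Data Quality and Distribution: Watch for missing values, schema changes, and drift in the data that feeds the model.

- Cost Monitoring: Track token usage and compute spend per application.

- Anomaly Detection: Flag unusual spikes in latency, errors, or usage so they can be investigated.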
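A few of these practices can be sketched with a small in-memory recorder that covers latency as a performance metric, token-based cost, and a simple z-score anomaly check. This is an illustration only: the `RequestMonitor` class, the per-1,000-token price, and the default threshold are assumptions, and data quality checks are left out for brevity.

```python
from statistics import mean, pstdev


class RequestMonitor:
    """Records per-request latency and token usage (hypothetical design)."""

    def __init__(self, cost_per_1k_tokens: float):
        self.cost_per_1k_tokens = cost_per_1k_tokens
        self.latencies_ms = []
        self.tokens = []

    def record(self, latency_ms: float, tokens: int) -> None:
        """Store the metrics for one completed request."""
        self.latencies_ms.append(latency_ms)
        self.tokens.append(tokens)

    def average_latency_ms(self) -> float:
        return mean(self.latencies_ms)

    def total_cost(self) -> float:
        """Estimated spend, assuming a flat price per 1,000 tokens."""
        return sum(self.tokens) / 1000 * self.cost_per_1k_tokens

    def anomalies(self, threshold: float = 2.0) -> list:
        """Indices of requests whose latency z-score exceeds the threshold."""
        mu = mean(self.latencies_ms)
        sigma = pstdev(self.latencies_ms)
        if sigma == 0:
            return []
        return [i for i, x in enumerate(self.latencies_ms)
                if abs(x - mu) / sigma > threshold]
```

In use, the application would call `record` after every model request and periodically read `average_latency_ms`, `total_cost`, and `anomalies` to feed dashboards or alerts. A z-score is only one possible detector; it works poorly when very few requests have been recorded.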

Conclusion

In conclusion, the convergence of Data Lake Houses and Generative AI marks a groundbreaking era in data-driven innovation. These transformative technologies, when equipped with the right tools and capabilities, empower organizations to unlock the full potential of their data resources. Vector databases and grounding LLMs with vector search address the challenge of hallucinations, improving content accuracy. AutoML streamlines model training and tuning, model serving makes trained models available to users, and LLM gateways simplify integration. Prompt tools enable clear communication with AI models, mitigating ambiguity and bias. Robust monitoring helps ensure model performance and ethical use.

Opinions expressed by DZone contributors are their own.
