GPT-J: GPT-3 Democratized

GPT-J is the open-source cousin of GPT-3 that everyone can use. With 6 billion parameters, this open-source transformer is all about democratizing transformers.


There's quite a similarity between cloud native and artificial intelligence: things move pretty fast, and if you are the curious kind, you are always excited to explore new tech. That can backfire, though. Sometimes a new technology distracts you so much that you skip your work.

I am guilty of that!

Anyway, let's talk about one such exciting development in our cousin field. It's called GPT-J, "the open source cousin of GPT-3 everyone can use".

The Leap: GPT-3

What’s GPT-3?

GPT-3 is the third generation of GPT (Generative Pre-trained Transformer) language models, with 175 billion parameters. That makes it far larger than GPT-2, which has only 1.5 billion parameters. Among the remaining models, the one closest to GPT-3 is Microsoft's Turing NLG, with just 17 billion parameters.

Now you can see what the hype of the last few years is all about: a deep learning model, trained on massive text datasets containing hundreds of billions of words, that can produce human-like text and has roughly 10x the parameters of its nearest competitor.
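To make the "10x" claim concrete, here is a small back-of-the-envelope sketch. It only uses the parameter counts quoted in this article (GPT-2: 1.5B, Turing NLG: 17B, GPT-3: 175B); the `PARAMS_B` table and the `ratio` helper are hypothetical names made up for illustration.

```python
# Parameter counts in billions, as quoted in this article.
PARAMS_B = {
    "GPT-2": 1.5,
    "Turing NLG": 17.0,
    "GPT-3": 175.0,
}


def ratio(bigger: str, smaller: str) -> float:
    """Return how many times more parameters `bigger` has than `smaller`."""
    return PARAMS_B[bigger] / PARAMS_B[smaller]


if __name__ == "__main__":
    for other in ("Turing NLG", "GPT-2"):
        # 175 / 17 is about 10.3, and 175 / 1.5 is about 116.7
        print(f"GPT-3 vs {other}: {ratio('GPT-3', other):.1f}x")
```

So "10x" is a slight rounding down of 175 / 17, and against GPT-2 the gap is more than a hundredfold.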

Uses of GPT-3

Since it's a language model, its applications are vast. We, too, got access and use GPT-3 in some of our blog posts, and it's pretty good, apart from a slightly bland tone.

Read GPT-3's take on what cloud native is here.

The list goes on, and GPT-3 is entering new domains such as code completion and generation. GitHub launched Copilot, which we describe as:

GitHub Copilot is an AI-assisted pair programmer that helps you write code more quickly and efficiently. GitHub Copilot extracts context from comments and code and provides quick suggestions for individual lines and entire functions. OpenAI Codex, a new AI system developed by OpenAI, powers GitHub Copilot.

GPT-3 can also power applications such as chat, tweet classification, outline creation, keyword extraction, HTML element generation, and many more. Learn more here.

Commercial Uses of GPT-3

Beyond Copilot, Microsoft invested $1 billion in OpenAI and, a year later, obtained an exclusive license to GPT-3. According to OpenAI, over 300 GPT-3 projects are in the works using its limited-access API. Among them are a tool for extracting insights from customer comments, a system that writes emails automatically from bullet points, and never-ending text-based adventure games.

Is GPT-3 Free?

No. It's a paid service, and it's in beta preview, so getting access is complicated and tiring: you may need to wait months.

Is GPT-3 Open Source?

No, so all you can do is wait for access.

Open Source Triumphs: GPT-J

What’s GPT-J?

I have previously written a blog post on how the CNCF keeps the innovation cycle rolling. It's a big organization with hundreds of sponsors, and it is democratizing cutting-edge tech.

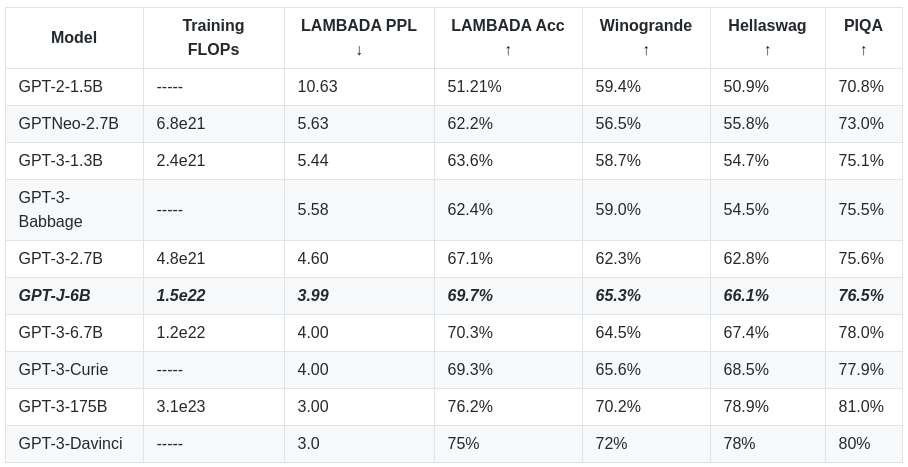

GPT-J, however, is not backed by such an organization. Without that kind of financial backing, it has 6 billion parameters, far fewer than GPT-3. But look at the other options available.

The publicly available GPT-2 has 1.5 billion parameters, significantly fewer than GPT-J's 6 billion. Moreover, GPT-J performs on par with the 6.7B-parameter version of GPT-3. These factors made it the most accurate publicly available model at the time.

A Few Problems With GPT-3

GPT-3 doesn't have long-term memory, so it doesn't learn from long-term interactions the way people do.

Lack of interpretability is another problem, one that affects large and complex models in general. GPT-3 and its training data are so extensive that its output is difficult to understand or analyze.

Because GPT-3 is so huge, it also takes longer to produce predictions.
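One way to get a feel for "so huge" is to estimate how much memory the weights alone occupy. The sketch below assumes 2 bytes per parameter in 16-bit floating point (fp16) and 4 bytes in 32-bit (fp32), uses 1 GB = 10^9 bytes, and ignores everything else a running model needs (activations, caches, framework overhead). The `weight_gb` helper and the tables are hypothetical names for illustration, not measured figures.

```python
# Bytes needed to store one parameter at a given precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}

# Parameter counts quoted in this article.
PARAMS = {
    "GPT-2": 1.5e9,
    "GPT-J": 6e9,
    "GPT-3": 175e9,
}


def weight_gb(n_params: float, dtype: str = "fp16") -> float:
    """Approximate size of the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for name, n in PARAMS.items():
        print(f"{name}: ~{weight_gb(n):.0f} GB in fp16, ~{weight_gb(n, 'fp32'):.0f} GB in fp32")
```

Under these assumptions GPT-J's fp16 weights come to about 12 GB, small enough for one large GPU, while GPT-3's come to about 350 GB and have to be spread across many accelerators.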

GPT-3 is no exception to the rule that all models are only as good as the data that was used to train them. This paper, for example, shows that anti-Muslim bias exists in GPT-3 and other big language models.

A Problem GPT-J Sets Out To Solve

As expected, GPT-J can't solve all of the problems above, because Eleuther lacks the hardware resources. But one thing Eleuther has done well is try to keep the bias present in GPT-3 out of GPT-J.

Eleuther's dataset is more diverse than GPT-3's, and it avoids some sites, like Reddit, that are more likely to include questionable content. Eleuther has "gone to tremendous pains over months to select this data set, making sure that it was both well filtered and diversified, and record its faults and biases," according to Connor Leahy, an independent AI researcher and cofounder of Eleuther.

GPT-J could also make it easy to develop tools similar to GitHub Copilot without access to the GPT-3 API, so people can build without being limited by API access.

Final Thoughts

Any language model will have a considerable amount of weakness, bias, and silly mistakes, which is entirely normal. Work is in progress, and we are not even close to AI taking over everything as skeptics imagine. Sure, AI is going to change the world, but we are still pretty far from that.

GPT-J is a small step towards democratizing transformers, and GPT-3 is a small step towards human-like language models. Try GPT-J out here.

Happy Learning!

Published at DZone with permission of Hrittik Roy. See the original article here.

