Redefining DevOps: The Transformative Power of Containerization

Discover how containerization is revolutionizing software development and deployment in the era of DevOps, and how this technology enhances business agility.


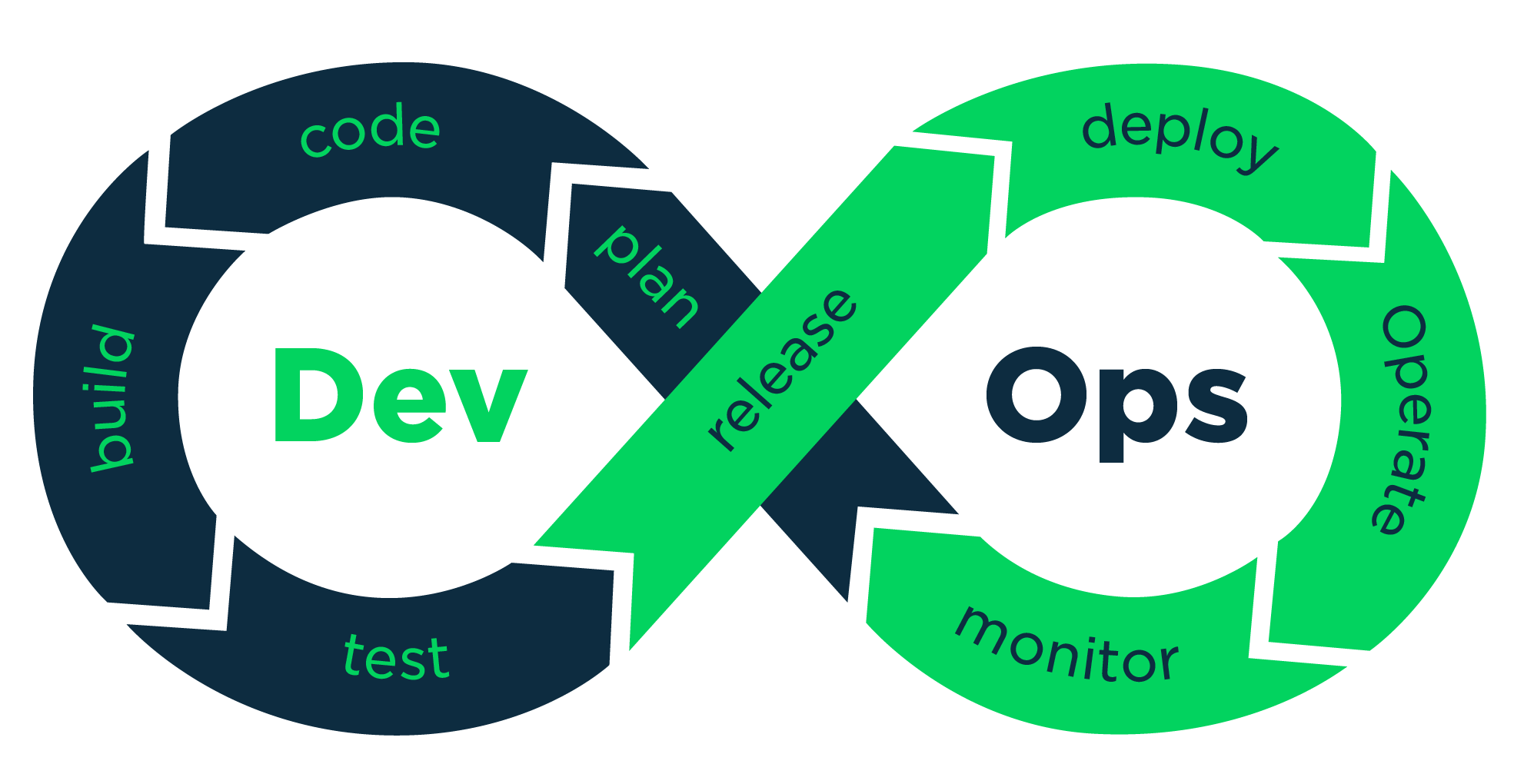

In the rapidly evolving digital age, DevOps has emerged as a crucial paradigm reshaping the software development landscape. DevOps, a term derived from 'Development' and 'Operations,' integrates these two historically siloed functions into a unified approach focused on shortening the software development life cycle. As a result, DevOps practices promote faster, more reliable software releases and facilitate continuous integration, continuous delivery, and high availability, enhancing business competitiveness and agility.

However, the ceaseless quest for increased efficiency and more robust delivery processes has led us to an innovation that is having a profound impact on the way we develop and deliver software: containerization. Containerization involves encapsulating or packaging up software code and all its dependencies to run uniformly and consistently on any infrastructure. This remarkable technology is not merely an incremental improvement in the DevOps world; it is a significant leap forward, transforming the operational effectiveness of software development and deployment.

This article will delve into the transformative power of containerization within the DevOps context, exploring its benefits, challenges, and its implications for the future of software development.

The Advent of Containerization

With the inception of the cloud era, the way we approach software development has undergone radical shifts. One of the significant breakthroughs accompanying this era is the concept of containerization. As organizations grappled with the complexities of managing multi-environment deployments and addressing compatibility issues across numerous platforms, containerization emerged as a solution.

The Basics

Containerization is a lightweight alternative to full-machine virtualization that involves encapsulating an application in a container with its own operating environment. Unlike a virtual machine, a container does not carry a full guest operating system; it shares the kernel of the host. It bundles the application with all related configurations, dependencies, libraries, and binaries the software needs to run. This packaged unit, known as a container, ensures that the application will operate consistently across multiple computing environments.

In the simplest terms, containers can be considered boxes, where everything needed to run a piece of software is packed. Each container is an isolated unit, which means it doesn't interfere with other containers and doesn't rely on specific host OS configurations. The inherent isolation and portability of containers make them particularly valuable in a DevOps context where consistency, autonomy, and seamless functionality across varying environments are crucial.

In a nutshell, containerization has revolutionized the concept of "write once, run anywhere," presenting opportunities to develop applications more rapidly, manage upgrades more effectively, and reduce the costs and efforts associated with managing deployments on different platforms. Its significance in the DevOps world is just beginning to be truly understood and harnessed.

Evolution and Acceptance in DevOps

Containerization is not a new concept; it has its roots in the Unix world, where the principles of isolation and segmentation were utilized in technologies such as chroot. However, it was Docker, released in 2013, that brought containerization to the mainstream and sparked a significant change in software development and operations.

Docker simplified and streamlined the process of creating, deploying, and running applications in containers. Its easy-to-use command-line interface, vast open-source community, and the introduction of Dockerfiles for building container images made Docker popular among both developers and operations teams.

The introduction of Docker coincided with the rise of microservices architectures. As organizations moved away from monolithic architectures towards microservices, the need for a technology that could manage these individual services efficiently became apparent. Containers proved to be the perfect fit, providing a way to segment and manage these services independently while maintaining consistency and reducing overhead.

This perfect storm of the rise of microservices and the introduction of Docker led to the rapid adoption of containerization in DevOps practices. DevOps, with its focus on continuous integration and delivery, automation, and collaborative problem-solving, found a powerful tool in containers.

Kubernetes, introduced in 2014, took containerization a step further as it provided a platform for automating deployment, scaling, and management of application containers across clusters of hosts. It brought a new level of power and flexibility to container orchestration, and its integration with DevOps practices has been instrumental in driving the acceptance and success of containerization.

As we continue to evolve and refine our software development and operational practices, containerization has become a mainstay in the DevOps toolset, providing unprecedented levels of efficiency, scalability, and consistency.

The Impact of Containerization on DevOps

Containerization has emerged as a linchpin in the domain of DevOps, helping to streamline processes and enhance productivity. Let's dive deeper into the role of containerization and understand its impact on DevOps through the lens of industry professionals.

Role of Containerization

The primary benefit of containerization, as Huezaifa A, Co-founder of StarlinkZone, eloquently puts it, lies in the "ability to bundle an application and its entire runtime environment, capturing all dependencies into one deployable unit." This bundling resolves the common 'works on my machine' issue, enabling consistency throughout the development, testing, and production stages. Furthermore, with everything an application needs to run encompassed within the container, developers can focus more on writing great code, knowing that it will run as intended, regardless of the environment.

Containerization also introduces a high degree of portability into the software development process. The bundled application can be moved between different environments, be it a developer's local machine, a test environment, or a production server, without requiring any changes. This portability smooths the interaction between the development, QA, and operations teams, fostering a more collaborative and efficient DevOps culture.

David Berube of Durable Programming, LLC, points out the utility of containerization in reproducing client environments: "Having Docker involved end-to-end lets us effectively match our development machines to myriad client production environments in a logical and reproducible way." This statement underscores the power of containerization in promoting replicability and predictability across multiple platforms and environments. In addition, it has significantly reduced the time and effort previously required to manage and resolve environment-related issues, thereby accelerating the software development process.

By facilitating consistency and portability, containerization has profoundly influenced the DevOps realm. It enhances the speed and quality of software development and fosters an environment conducive to seamless collaboration between different teams. This inherent alignment with the DevOps philosophy makes containerization an indispensable part of today's software development pipeline.

Microservices and Containerization

Recent years have witnessed a significant shift in software development, moving away from monolithic applications toward a microservices architecture. Microservices architecture involves breaking down an application into a collection of loosely coupled services, each of which can be developed, deployed, and scaled independently. This transition has been significantly facilitated by the advent of containerization.

Huezaifa points out, "With containerization, we've managed to partition monolithic applications into microservices." Containers offer an ideal environment for managing and deploying microservices because each service can be packaged into a separate container with its dependencies. This approach allows each service to run in isolation, preventing cascading failures and enabling independent scaling based on individual service requirements.

By breaking down an application into smaller, manageable parts, containerization not only simplifies understanding and maintaining the codebase but also considerably reduces the scope of potential issues. For instance, a bug in one service does not directly impact others, and any problematic service can be independently updated or rolled back without affecting the rest of the application.

The encapsulation provided by containers also makes them a natural fit for continuous integration/continuous delivery (CI/CD) pipelines, a core component of DevOps practices. Each microservice can be continuously developed, tested, and deployed without interrupting other services, leading to quicker releases and more robust applications.
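To make the idea of independent pipelines concrete, here is a minimal Python sketch of how a CI job might decide which services to rebuild from a list of changed files. The `services/<name>/` repository layout and the function name `services_to_rebuild` are assumptions made for illustration; they are not prescribed by Docker, Kubernetes, or any particular CI tool.

```python
from pathlib import PurePosixPath


def services_to_rebuild(changed_files, services_root="services"):
    """Return the sorted names of services touched by a set of changed files.

    Assumes a hypothetical monorepo layout: <services_root>/<service>/...
    Files outside that layout are ignored.
    """
    touched = set()
    for path in changed_files:
        parts = PurePosixPath(path).parts
        # A file belongs to a service only if it sits below services/<name>/.
        if len(parts) >= 3 and parts[0] == services_root:
            touched.add(parts[1])
    return sorted(touched)


if __name__ == "__main__":
    changed = [
        "services/cart/app.py",
        "services/cart/Dockerfile",
        "services/payments/handler.py",
        "README.md",
    ]
    # Only the cart and payments services need a new image build.
    print(services_to_rebuild(changed))
```

With a mapping like this, a change to one service triggers a build and deployment of that service's container only, leaving the others untouched.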

The symbiotic relationship between microservices and containerization has been instrumental in advancing the DevOps model. With containers, developers can build and deploy microservices with increased speed, agility, and reliability, leading to efficient software delivery processes that respond better to business needs.

The Role of Docker and Kubernetes in Containerization

Docker and Kubernetes have been at the forefront of the containerization revolution, playing pivotal roles in integrating this technology into DevOps processes. They each serve different but complementary functions in managing and orchestrating containers.

Adopting Docker for Streamlined DevOps

Docker has become synonymous with containerization due to its simplicity, usability, and robust container management features. It allows developers to package an application and its environment into a standalone, self-sufficient unit called a Docker container.

The practical implications of Docker for DevOps processes are profound. Berube aptly says, "By focusing on Nix and Docker as our gold standard for testing, development, and production, we can cost-efficiently help clients modernize their development practices without forcing them to adopt proprietary technologies." Docker's platform-agnostic nature has indeed made it possible to create uniform development environments that mirror production, leading to fewer surprises and smoother deployments.

Docker also integrates seamlessly with many popular DevOps tools, making it a versatile solution that fits perfectly into existing workflows. In addition, it helps maintain the consistency of applications across the software delivery lifecycle - from a developer's workstation to the production environment. This consistency significantly reduces the complexity associated with application deployment and maintenance, fostering a more efficient and effective DevOps culture.

Docker's containerization approach has made the development, shipping, and running of applications much more streamlined and straightforward. With Docker, teams can enjoy enhanced control and predictability, leading to higher efficiency and productivity in their DevOps processes.

Leveraging Kubernetes in Containerization

While Docker has revolutionized the creation and deployment of containers, Kubernetes has emerged as the gold standard for managing those containers, especially at scale. Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications across server clusters.

Huezaifa sums up the role of Kubernetes succinctly: "Kubernetes, a container orchestration platform, works excellently with Docker, enabling us to automate the deployment, scaling, and management of our applications." Kubernetes can handle hundreds of Docker containers across multiple servers, making it an ideal tool for large, distributed applications.

Kubernetes provides several significant features that enhance the benefits of containerization. For example, it provides automatic bin packing, horizontal scaling, automated rollouts and rollbacks, service discovery, and load balancing. In addition, Kubernetes ensures failover for your applications, meaning if a container or a node fails, Kubernetes can automatically replace it to keep your services running smoothly.
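Horizontal scaling, one of the features listed above, can be illustrated with a short sketch. The Kubernetes documentation describes the core Horizontal Pod Autoscaler calculation as the current replica count multiplied by the ratio of the current metric value to the target value, rounded up. The Python function below reproduces only that core calculation; the function name, the parameter names, and the default replica bounds are assumptions, and the real autoscaler also applies tolerances and stabilization behavior that are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Sketch of the core horizontal scaling calculation.

    desired = ceil(current_replicas * current_metric / target_metric),
    clamped to [min_replicas, max_replicas]. The bounds are illustrative
    defaults, not values taken from Kubernetes.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target scale out.
    print(desired_replicas(4, 90, 60))
    # 2 replicas averaging 30% CPU against a 60% target scale in.
    print(desired_replicas(2, 30, 60))
```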

One of the most valuable aspects of Kubernetes in a DevOps context is its ability to keep an application in its declared, desired state over time, regardless of the lifecycle of any individual container. It enables DevOps teams to manage services rather than individual containers, thereby facilitating continuous integration/continuous deployment (CI/CD), logging, and monitoring, which are crucial components of successful DevOps practices.
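Managing services rather than individual containers can be pictured as a reconciliation loop: the team declares how many replicas should exist, and a controller compares that declaration with what is actually running. The following Python sketch is a toy model of that idea only. The function name, the tuple format, and the `"new-replica"` placeholder are hypothetical, and real Kubernetes controllers are considerably more involved.

```python
def reconcile(desired_count, observed):
    """Return the actions that move observed state toward the desired state.

    observed is a list of (container_name, is_healthy) tuples.
    This is a toy model, not the Kubernetes controller logic.
    """
    actions = []
    healthy = []
    for name, is_healthy in observed:
        if is_healthy:
            healthy.append(name)
        else:
            # Failed containers are removed; replacements are started below.
            actions.append(("remove", name))
    missing = desired_count - len(healthy)
    if missing > 0:
        actions.extend([("start", "new-replica")] * missing)
    elif missing < 0:
        # Too many healthy replicas: remove the surplus.
        actions.extend(("remove", name) for name in healthy[desired_count:])
    return actions


if __name__ == "__main__":
    # Three replicas are wanted, but one of the two running has failed.
    print(reconcile(3, [("web-1", True), ("web-2", False)]))
```

The caller never says "restart web-2"; it only states the desired count, and the loop works out the steps.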

With its ability to manage complex applications at scale, Kubernetes enhances the power of containerization and aligns it with the principles of DevOps. By automating many of the manual processes involved in deploying and scaling containerized applications, Kubernetes has become an essential tool in the DevOps arsenal.

Challenges and Solutions in Implementing Containerization

While containerization provides many benefits and is a powerful tool in the DevOps toolkit, it is not without its challenges. Implementing containerization requires a thoughtful approach, and overcoming its challenges necessitates a solid understanding of the technology and its landscape.

Common Challenges

- Complexity: Implementing containerization, particularly at scale, can be complex. It requires a deep understanding of both the application being containerized and the underlying technology. In addition, learning how to create Docker images, manage Dockerfiles, or orchestrate containers using Kubernetes can involve a steep learning curve for teams new to containerization.

- Security: Containers share the host system's kernel, which can pose a security risk if a container is compromised. Additionally, vulnerabilities in the application or its dependencies bundled within the container can be exploited if not properly managed and updated.

- Networking: Networking in a containerized environment can present complexities. It's necessary to configure communication between containers across different hosts, handle dynamic IP addresses of containers, and manage the exposure of services to the outside world, all of which can be challenging.

- Monitoring and Logging: Traditional monitoring and logging tools might not work effectively in a containerized environment because of the transient nature of containers. As containers are frequently stopped, started, or moved around, tracking their performance and logs can be problematic.

- Data Management: Containers are ephemeral by nature, meaning they can be started or stopped at any time. This can pose problems when dealing with persistent data storage and ensuring data consistency across various containers and sessions.

- Resource Management: Overcommitting resources can cause performance issues, while underutilizing resources can lead to inefficient operations. Striking the right balance requires careful management and understanding of resource allocation in a containerized environment.
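The resource management challenge above comes down to simple arithmetic that is easy to get wrong by hand. As a rough sketch, the Python snippet below sums container CPU requests and compares them with a node's capacity. It relies on the Kubernetes convention that CPU can be written either in millicores (`"500m"`) or in whole or fractional cores (`"2"`, `"0.5"`); the function names and the sample values are assumptions for illustration.

```python
def cpu_to_millicores(quantity):
    """Convert a Kubernetes-style CPU quantity ("500m", "2", "0.5") to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(round(float(quantity) * 1000))


def cpu_headroom(node_allocatable, container_requests):
    """Return millicores left on a node after summing all container requests.

    A negative result means the requests overcommit the node.
    """
    requested = sum(cpu_to_millicores(q) for q in container_requests)
    return cpu_to_millicores(node_allocatable) - requested


if __name__ == "__main__":
    # A 2-core node with three containers requesting 1750m in total.
    print(cpu_headroom("2", ["500m", "0.5", "750m"]))
    # A 1-core node asked to host 1200m of requests is overcommitted.
    print(cpu_headroom("1", ["600m", "600m"]))
```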

Learning Curve and Initial Challenges

The transition to a containerized environment is not a trivial task, especially for teams new to the technology. Understanding and implementing containerization entail an initial learning curve. As Berube indicates, even something as fundamental as Dockerfiles can pose challenges. "While theory states this should not occur, experience indicates that, in practice, Dockerfiles can stop working in time just as other code can - they may very well depend on other files or images which, in time, change."

A Dockerfile is essentially a script that contains instructions on how to build a Docker image. It should ideally be designed to be self-contained and not rely on files or images that can change over time. However, the reality of software development means that dependencies and configurations can and do change, and these changes can impact the reliability of Dockerfiles. A Dockerfile therefore requires careful management and version control, just like any other piece of code.
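One common source of the drift described above is a base image reference that can silently change, such as an image with no tag or with the `latest` tag. The Python sketch below is a rough heuristic, not an official Docker tool: it scans the `FROM` lines of a Dockerfile and reports base images that are not pinned to an explicit tag or digest. The function name is hypothetical, and the check does not resolve build arguments such as `${BASE_IMAGE}`. Note that tags themselves can be moved by the image publisher, so a digest is the stricter form of pinning.

```python
def unpinned_base_images(dockerfile_text):
    """Return base images in FROM lines that lack an explicit tag or digest.

    Heuristic only: images without a tag, or tagged "latest", are reported.
    Stage aliases (FROM ... AS name) and "scratch" are skipped.
    """
    stages = set()
    findings = []
    for line in dockerfile_text.splitlines():
        tokens = line.split()
        if not tokens or tokens[0].upper() != "FROM":
            continue
        # Drop optional flags such as --platform=linux/amd64.
        args = [t for t in tokens[1:] if not t.startswith("--")]
        if not args:
            continue
        image = args[0]
        is_stage = image.lower() in stages
        # Remember aliases so a later "FROM builder" is not treated as an image.
        if len(args) >= 3 and args[1].upper() == "AS":
            stages.add(args[2].lower())
        if is_stage or image == "scratch":
            continue
        if "@" in image:
            continue  # pinned by digest
        # Look only at the last path component so registry ports are ignored.
        last = image.rsplit("/", 1)[-1]
        if ":" not in last or last.split(":", 1)[1] == "latest":
            findings.append(image)
    return findings


if __name__ == "__main__":
    sample = "FROM python:3.12-slim AS builder\nFROM ubuntu\nFROM nginx:latest\n"
    print(unpinned_base_images(sample))
```

A check like this could run in the same pipeline that builds the image, so that an unpinned base is caught in review rather than months later when a build breaks.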

Furthermore, understanding how to manage containers using Kubernetes adds another layer of complexity. Kubernetes is a powerful orchestration tool, but its richness in features and capabilities can be overwhelming for newcomers. Learning to define Kubernetes objects, setting up a cluster, and handling Kubernetes networking are among the initial challenges that a team might face.

It's important to remember that these initial hurdles are not insurmountable. With adequate training, solid documentation, and incremental adoption, teams can overcome these challenges and reap the benefits of containerization in their DevOps practices.

Resolving Technical Complexities

Overcoming the technical challenges of implementing containerization requires a combination of creative problem-solving, leveraging the built-in capabilities of the tools, and potentially incorporating third-party solutions. Here are a few strategies to navigate these complexities:

- Handling Persistent Data: As mentioned earlier, containers are ephemeral, and managing persistent data across container restarts can be challenging. Huezaifa describes one solution: "We utilized the persistent volumes feature in Kubernetes and also explored third-party solutions to maintain data persistence across container restarts." Persistent Volumes (PVs) and Persistent Volume Claims (PVCs) in Kubernetes provide a way to declare and manage storage resources that live independently of containers, ensuring that data survives container restarts.

- Creating Docker Images for Legacy Software: Dockerizing legacy software may seem daunting, especially when existing examples no longer work. However, as Berube suggests, "If you can get the software to compile, you can likely create a Docker image for it - even if the examples for older software may no longer work, you can likely create a solution that does work without too much hassle, assuming you can still find a way to make the software in question compile." Of course, this approach requires a deep understanding of both the software and Docker, but it shows how containerization can be applied even in complex, legacy environments.

- Security and Networking: Leveraging built-in security features and networking policies of containerization tools can resolve many associated risks. For instance, Docker offers security features like user namespaces, seccomp profiles, and capabilities, while Kubernetes provides Network Policies to control traffic flow at the IP address or port level.

- Monitoring and Logging: Embracing container-specific monitoring and logging tools can simplify these tasks. Tools like Prometheus for monitoring and Fluentd or Loki for log aggregation are designed to handle the dynamic nature of containers and provide valuable insights into container performance.
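As an illustration of the persistent data strategy above, the following Python sketch builds a minimal Persistent Volume Claim manifest as a dictionary and prints it as JSON, a format Kubernetes accepts alongside YAML. The field names follow the core `v1` PersistentVolumeClaim schema; the claim name `app-data`, the requested size, and the helper function itself are assumptions for illustration, and a real claim would usually also specify a storage class suited to the cluster.

```python
import json


def pvc_manifest(name, storage="1Gi", access_mode="ReadWriteOnce"):
    """Build a minimal PersistentVolumeClaim manifest as a plain dictionary.

    The name, size, and access mode are illustrative defaults.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": storage}},
        },
    }


if __name__ == "__main__":
    # The printed JSON could be saved to a file and applied to a cluster.
    print(json.dumps(pvc_manifest("app-data", storage="5Gi"), indent=2))
```

Because the claim is declared separately from any container, the storage it binds to outlives the containers that mount it.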

These strategies, when combined with a deep understanding of the containerization landscape and the specific requirements of your application, can help resolve the technical complexities of implementing containerization in your DevOps practices.

Conclusion

Containerization has emerged as a game-changer in the world of software development and DevOps. Its influence and impact cannot be overstated, as it has fundamentally shifted the way we develop, deploy, and manage applications. From creating consistency across environments to facilitating the microservices architecture and improving the speed and reliability of deployments, containerization brings substantial benefits to the DevOps landscape.

Docker and Kubernetes, two leading tools in containerization, have proved instrumental in bringing these benefits to life. Docker's ability to bundle an application and its entire runtime environment into one deployable unit, and Kubernetes' power to manage these containers efficiently at scale, have significantly streamlined DevOps processes.

However, the journey toward adopting containerization isn't without its challenges. The initial learning curve and the complexities of persistent data, security, networking, and monitoring are hurdles that teams often face. But as we've seen, these challenges can be addressed and overcome through a combination of built-in tool features, third-party solutions, and sound technical strategies.

Looking ahead, the role of containerization in DevOps will only grow more prominent. As the complexity and scale of applications continue to increase, the need for solutions that improve efficiency, consistency, and scalability will also rise. Containerization, with its inherent advantages, is poised to meet these needs and drive the future of DevOps.

By continuing to evolve and adapt to the ever-changing software development landscape, containerization will remain a cornerstone of modern DevOps practices, enabling teams to deliver better software faster and more reliably for years to come.

