Word Embeddings: Giving Your ChatBot Context for Better Answers

Learn how to build an expert bot using word embeddings and ChatGPT. Leverage the power of word vectors to enhance your chatbot's responses.


There is no doubt that OpenAI's ChatGPT is exceptionally intelligent: it has passed the lawyer's bar exam, it possesses knowledge akin to a doctor's, and some tests have clocked its IQ at 155. However, it tends to fabricate information instead of conceding ignorance. This tendency, coupled with the fact that its knowledge ends in 2021, poses challenges when building specialized products on the GPT API.

How can we surmount these obstacles? How can we impart new knowledge to a model like GPT-3? My goal is to address these questions by constructing a question-answering bot employing Python, the OpenAI API, and word embeddings.

What I Will Be Building

I intend to create a bot that generates continuous integration (CI) pipelines from a prompt. As you may know, pipelines in Semaphore CI/CD are written in YAML.

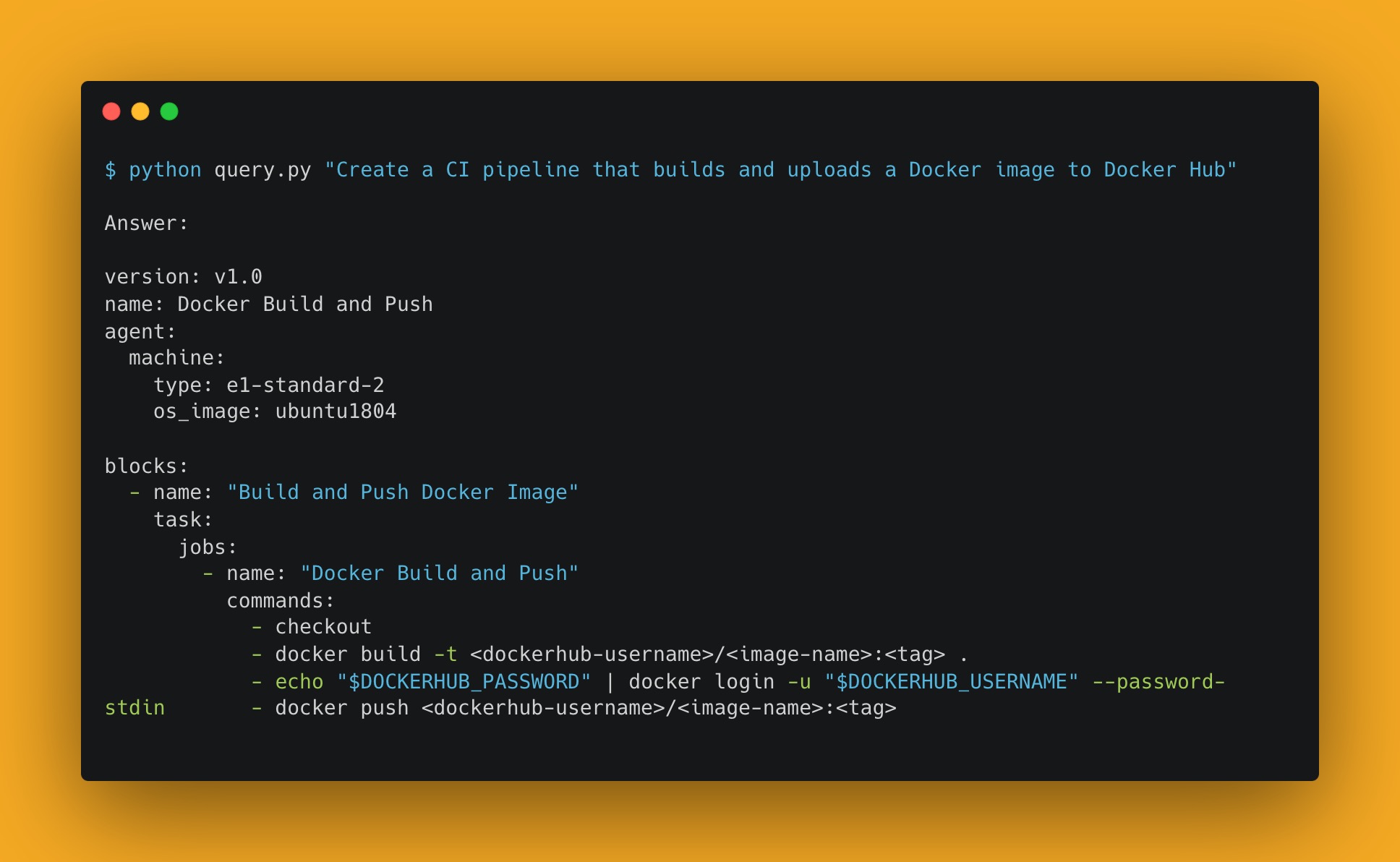

Here is an example of the bot in action:


Screenshot of the running program. On the screen, the command python query.py "Create a CI pipeline that builds and uploads a Docker image to Docker Hub" is executed, and the program prints out YAML corresponding to a CI pipeline that performs the requested action.

In the spirit of projects like DocsGPT, My AskAI, and Libraria, I plan to "teach" the GPT-3 model about Semaphore and how to generate pipeline configuration files. I will achieve this by leveraging the existing documentation.

I will not assume prior knowledge of bot building and will maintain clean code so that you can adapt it to your requirements.

Prerequisites

You do not need experience in coding a bot or knowledge of neural networks to follow this tutorial. However, you will need:

Python 3.

A Pinecone account (sign up for the Starter plan for free).

An OpenAI API Key (paid, requires a credit card); new users can experiment with $5 in free credit during the first 3 months.

But ChatGPT Can't Learn, Can It?

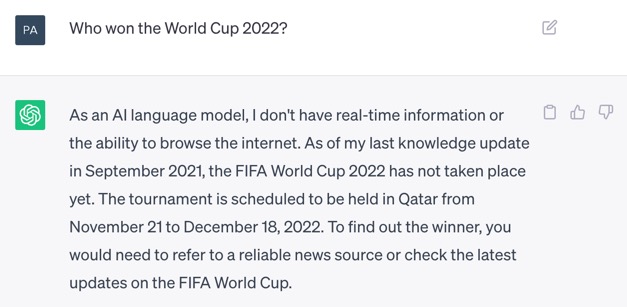

ChatGPT, or more accurately, GPT-3 and GPT-4, the Large Language Models (LLMs) powering them, have been trained on a massive dataset with a cutoff date around September 2021.

In essence, GPT-3 knows very little about events beyond that date. We can verify this with a simple prompt:

While some OpenAI models can be fine-tuned, the more advanced models, such as the ones we're interested in, cannot; we cannot augment their training data.

How can we get answers from GPT-3 beyond its training data? One method involves exploiting its text comprehension abilities; by enhancing the prompt with relevant context, we can likely obtain the correct answer.

In the example below, I provide context from FIFA's official site, and the response differs significantly:

We can deduce that the model can answer questions beyond its training data if it is given enough relevant context. The question remains: how can we know what's relevant to an arbitrary prompt? To answer that, we need to explore what word embeddings are.

What Are Word Embeddings?

In the context of language models, an embedding is a way of representing words, sentences, or entire documents as vectors or lists of numbers.

To calculate embeddings, we will need a neural network such as word2vec or text-embedding-ada-002. These networks have been trained on massive amounts of text and can find relationships between words by analyzing the frequencies with which specific patterns appear in the training data.

Let’s say we have the following words:

Cat

Dog

Ball

House

Imagine we use one of these embedding networks to calculate the vectors for each word. For example:

| Word | Vector | Context |
|---|---|---|
| Cat | [0.1, 0.2, 0.3, 0.4, 0.5] | Animals, objects, small things |
| Dog | [0.6, 0.7, 0.8, 0.9, 1.0] | Animals, objects, large things |
| Ball | [0.2, 0.4, 0.6, 0.8, 1.0] | Objects, toys, small things |
| House | [0.3, 0.6, 0.9, 1.2, 1.5] | Buildings, homes, large things |

Once we have the vectors for each word, we can use them to represent the meaning of the text. For example, the sentence “The cat chased the ball” can be represented as the vector [0.1, 0.2, 0.3, 0.4, 0.5] + [0.2, 0.4, 0.6, 0.8, 1.0] = [0.3, 0.6, 0.9, 1.2, 1.5]. This vector represents a sentence that is about an animal chasing an object.
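The sentence vector above is simply an element-wise sum of the word vectors. A minimal sketch in plain Python, using the made-up vectors from the table (real models produce vectors with hundreds or thousands of dimensions, and a model like text-embedding-ada-002 embeds a whole sentence directly instead of summing word vectors):

```python
# Toy word vectors copied from the table above (illustrative values only).
word_vectors = {
    "cat": [0.1, 0.2, 0.3, 0.4, 0.5],
    "ball": [0.2, 0.4, 0.6, 0.8, 1.0],
}


def add_vectors(a: list[float], b: list[float]) -> list[float]:
    """Element-wise sum of two vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [x + y for x, y in zip(a, b)]


# "The cat chased the ball" -> cat + ball
sentence = add_vectors(word_vectors["cat"], word_vectors["ball"])
print([round(x, 2) for x in sentence])  # [0.3, 0.6, 0.9, 1.2, 1.5]
```

Rounding is only there to hide floating-point noise such as 0.30000000000000004.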

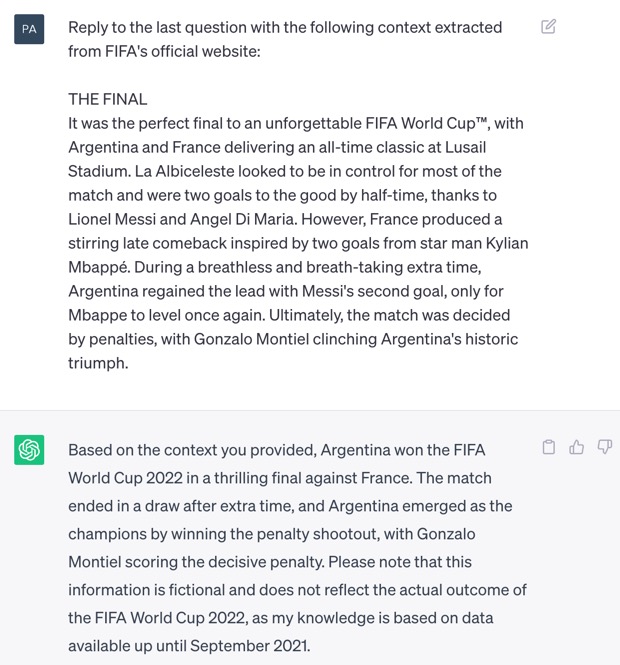

Word embeddings can be visualized as multidimensional spaces where words or sentences with similar meanings are close together. We can compute the "distance" between vectors to find similar meanings for any input text.

3D representation of embeddings as vector spaces. In reality, these spaces can have hundreds or thousands of dimensions. Source: Meet AI’s Multitool: Vector Embeddings


The actual mathematics behind all this is beyond the scope of this article. However, the key takeaway is that vector operations allow us to manipulate or determine meaning using mathematics. Take the vector that represents the word “queen,” subtract the “woman” vector from it, and add the “man” vector. The result should be a vector in the vicinity of “king.” If we add “son,” we should get somewhere close to “prince.”
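To make "distance" concrete, here is a minimal sketch of cosine similarity, the metric the Pinecone index later in this article is created with. It reuses the toy vectors from the table, which are made up; Ball and House happen to be exact multiples of Cat, so cosine similarity scores them as identical to Cat, because it compares direction and ignores magnitude.

```python
import math

# Toy vectors from the table above (illustrative values only).
vectors = {
    "cat": [0.1, 0.2, 0.3, 0.4, 0.5],
    "dog": [0.6, 0.7, 0.8, 0.9, 1.0],
    "ball": [0.2, 0.4, 0.6, 0.8, 1.0],
    "house": [0.3, 0.6, 0.9, 1.2, 1.5],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between a and b: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Score every other word against "cat" and list the most similar first.
scores = {
    word: cosine_similarity(vectors["cat"], vec)
    for word, vec in vectors.items()
    if word != "cat"
}
for word, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{word}: {score:.3f}")
```

With these toy numbers, ball and house both score 1.000 and dog scores about 0.965.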

Embedding Neural Networks With Tokens

So far, we have discussed embedding neural networks taking words as inputs and numbers as outputs. However, many modern networks have moved from processing words to processing tokens.

A token is the smallest unit of text that can be processed by the model. Tokens can be words, characters, punctuation marks, symbols, or parts of words.

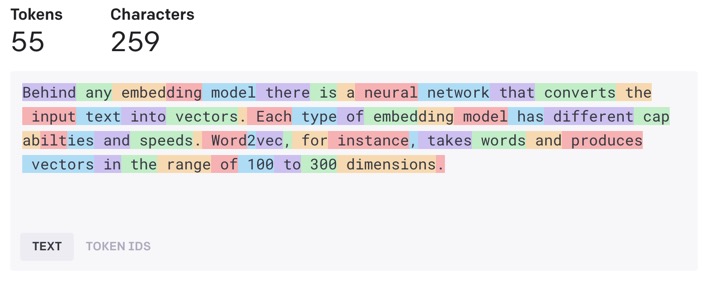

We can see how words are converted to tokens by experimenting with the OpenAI online tokenizer, which uses Byte-Pair Encoding (BPE) to convert text to tokens and represent each one with a number:

There is often a 1-to-1 relationship between tokens and words. Most tokens include the word and a leading space. However, there are special cases like "embedding," which consists of two tokens, "embed" and "ding," or "capabilities," which consists of four tokens. If you click "Token IDs," you can see the model's numeric representation of each token.
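To illustrate the merge idea behind BPE, here is a deliberately simplified sketch. The merge rules and token IDs are invented so that "embedding" splits into "embed" and "ding" as in the tokenizer example; real BPE tokenizers learn tens of thousands of merges from data and work on bytes rather than characters.

```python
# Hypothetical merge rules, in priority order, and a hypothetical vocabulary.
MERGES = [
    ("e", "m"), ("em", "b"), ("emb", "e"), ("embe", "d"),
    ("d", "i"), ("di", "n"), ("din", "g"),
]
VOCAB = {"embed": 1001, "ding": 1002}


def apply_merges(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Start from single characters and apply each merge rule in order."""
    symbols = list(word)
    for left, right in merges:
        merged = []
        i = 0
        while i < len(symbols):
            # Join the adjacent pair if it matches the current rule.
            if i + 1 < len(symbols) and symbols[i] == left and symbols[i + 1] == right:
                merged.append(left + right)
                i += 2
            else:
                merged.append(symbols[i])
                i += 1
        symbols = merged
    return symbols


def encode(word: str) -> list[int]:
    """Map each token to its (hypothetical) numeric ID, or -1 if unknown."""
    return [VOCAB.get(token, -1) for token in apply_merges(word, MERGES)]


print(apply_merges("embedding", MERGES))  # ['embed', 'ding']
print(encode("embedding"))                # [1001, 1002]
```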


Designing a Smarter Bot Using Embeddings

Now that we have an understanding of what embeddings are, the next question is: How can they help us build a smarter bot?

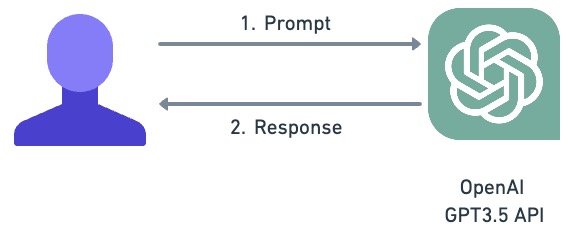

First, let's consider what happens when we use the GPT-3 API directly. The user issues a prompt, and the model responds to the best of its ability.

However, when we add context to the equation, things change. For example, when I asked ChatGPT about the winner of the World Cup after providing context, it made all the difference.


So, the plan to build a smarter bot is as follows:

Intercept the user's prompt.

Calculate the embeddings for that prompt, yielding a vector.

Search a database for documents near the vector, as they should be semantically relevant to the initial prompt.

Send the original prompt to GPT-3, along with any relevant context.

Forward GPT-3's response to the user.
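The plan can be sketched as a single function. To keep the sketch independent of any SDK, the embedding, search, and completion steps are passed in as plain callables; the names `embed`, `search`, and `llm` are hypothetical placeholders, not OpenAI or Pinecone APIs.

```python
from typing import Callable

# Hypothetical type alias for an embedding vector.
Vector = list[float]


def answer_with_context(
    prompt: str,
    embed: Callable[[str], Vector],
    search: Callable[[Vector, int], list[str]],
    llm: Callable[[str], str],
    top_k: int = 3,
) -> str:
    """Sketch of the plan: embed the prompt, fetch nearby documents, ask the model."""
    vector = embed(prompt)                # step 2: prompt -> vector
    documents = search(vector, top_k)     # step 3: vector -> semantically close documents
    context = "\n---\n".join(documents)
    enriched_prompt = f"Context:\n{context}\n\nQuestion: {prompt}"
    return llm(enriched_prompt)           # steps 4-5: ask the model, return its reply


# Usage with stand-in functions (no network calls).
def fake_embed(text: str) -> Vector:
    return [float(len(text))]


def fake_search(vector: Vector, k: int) -> list[str]:
    return ["doc A", "doc B"][:k]


def echo_llm(prompt: str) -> str:
    return prompt


print(answer_with_context("How do I cache Docker layers?", fake_embed, fake_search, echo_llm, top_k=1))
```

Injecting the three steps keeps the flow testable without API keys; the real bot below wires them to OpenAI and Pinecone.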

Let's begin like most projects, by designing the database.

Creating a Knowledge Database With Embeddings

Our context database must include the original documentation and their respective vectors. In principle, we can employ any type of database for this task, but a vector database is the optimal tool for the job.

Vector databases are specialized databases designed to store and retrieve high-dimensional vector data. Instead of employing a query language such as SQL for searching, we supply a vector and request the N closest neighbors.
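Conceptually, "give me the N closest neighbors" can be sketched as a brute-force scan over every stored vector. This is only a stand-in to show the query semantics, not how Pinecone works internally (vector databases typically use approximate nearest-neighbor indexes to avoid comparing against every vector). The in-memory store and its ids are hypothetical.

```python
import heapq
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def query_top_k(store: dict[str, list[float]], query: list[float], top_k: int) -> list[tuple[str, float]]:
    """Brute force: score every stored vector, keep the top_k highest (id, score) pairs."""
    scored = ((doc_id, cosine_similarity(query, vec)) for doc_id, vec in store.items())
    return heapq.nlargest(top_k, scored, key=lambda pair: pair[1])


# Hypothetical in-memory "database" with 3-dimensional vectors.
store = {
    "docker-pull": [0.9, 0.1, 0.0],
    "docker-push": [0.8, 0.3, 0.1],
    "java-tests": [0.0, 0.2, 0.9],
}
for doc_id, score in query_top_k(store, [1.0, 0.2, 0.0], top_k=2):
    print(doc_id, round(score, 3))
```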

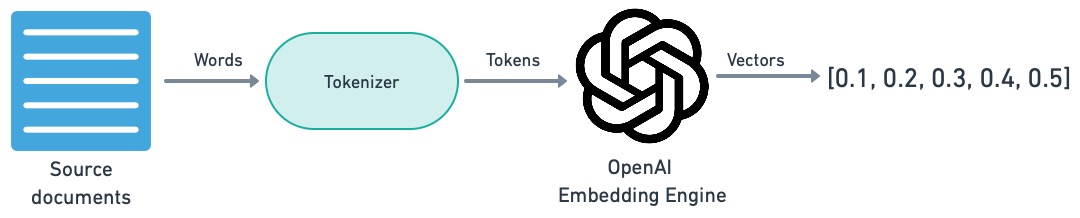

To generate the vectors, we will use text-embedding-ada-002 from OpenAI, as it is the fastest and most cost-effective model they offer. The model converts the input text into tokens and uses a neural network architecture called the Transformer, which is built around an attention mechanism, to learn their relationships. The output of this network is a vector representing the meaning of the text.

To create a context database, I will:

Collect all the source documentation.

Filter out irrelevant documents.

Calculate the embeddings for each document.

Store the vectors, original text, and any other relevant metadata in the database.

Converting Documents Into Vectors

First, I create an environment file called .env with the OpenAI API key. This file should never be committed to version control, as the API key is private and tied to your account.

export OPENAI_API_KEY=YOUR_API_KEY

Next, I'll create a virtualenv for my Python application:

$ virtualenv venv
$ source venv/bin/activate
$ source .env

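And install the OpenAI and NumPy packages. The code in this article uses the pre-1.0 interface of the openai package (openai.Embedding and openai.ChatCompletion were removed in version 1.0), so pin the version:

```bash
$ pip install "openai<1.0" numpy
```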

Let's try calculating the embedding for the string "Docker Containers". You can run this in the Python REPL or as a Python script:

$ python
>>> import openai
>>> embeddings = openai.Embedding.create(input="Docker Containers", engine="text-embedding-ada-002")
>>> embeddings
JSON: {
  "data": [
    {
      "embedding": [
        -0.00530336843803525,
        0.0013223182177171111,
        ... 1533 more items ...,
        -0.015645816922187805
      ],
      "index": 0,
      "object": "embedding"
    }
  ],
  "model": "text-embedding-ada-002-v2",
  "object": "list",
  "usage": {
    "prompt_tokens": 2,
    "total_tokens": 2
  }
}

As you can see, OpenAI's model responds with an embedding list containing 1536 items — the vector size for text-embedding-ada-002.

Storing the Embeddings in Pinecone

While there are multiple vector database engines to choose from, such as the open-source Chroma, I chose Pinecone because it's a managed database with a free tier, which makes things simpler. Their Starter plan is more than capable of handling all the data I will need.

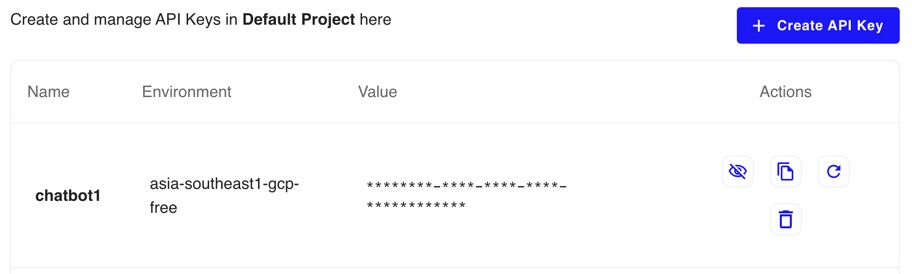

After creating my Pinecone account and retrieving my API key and environment, I add both values to my .env file.

Now .env should contain my Pinecone and OpenAI secrets.

export OPENAI_API_KEY=YOUR_API_KEY

# Pinecone secrets
export PINECONE_API_KEY=YOUR_API_KEY
export PINECONE_ENVIRONMENT=YOUR_PINECONE_DATACENTER
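Because os.getenv returns None when a variable is missing, forgetting to run source .env may only surface later as a less obvious error from a client library. A small helper can fail fast instead; require_env is a hypothetical addition, not part of the original scripts.

```python
import os


def require_env(name: str) -> str:
    """Return an environment variable's value, or raise if it is unset or empty."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"Environment variable {name} is not set; did you run `source .env`?")
    return value


# Example (hypothetical usage in the scripts below):
# api_key = require_env("PINECONE_API_KEY")
```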

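Then, I install the Pinecone client for Python. The scripts below use pinecone.init(), which version 3 of the client removed, so pin an older release:

$ pip install "pinecone-client<3"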

I need to initialize a database; these are the contents of the db_create.py script:

# db_create.py
import pinecone
import openai
import os

index_name = "semaphore"
embed_model = "text-embedding-ada-002"

api_key = os.getenv("PINECONE_API_KEY")
env = os.getenv("PINECONE_ENVIRONMENT")
pinecone.init(api_key=api_key, environment=env)

# embed some sample text only to learn the vector dimension of the model
embedding = openai.Embedding.create(
    input=[
        "Sample document text goes here",
        "there will be several phrases in each batch"
    ], engine=embed_model
)

if index_name not in pinecone.list_indexes():
    print("Creating pinecone index: " + index_name)
    pinecone.create_index(
        index_name,
        dimension=len(embedding['data'][0]['embedding']),
        metric='cosine',
        metadata_config={'indexed': ['source', 'id']}
    )

The script can take a few minutes to create the database.

$ python db_create.py

Next, I will install the tiktoken package. I'll use it to calculate how many tokens the source documents have. This is important because the embedding model can only handle up to 8191 tokens.

$ pip install tiktoken

While installing packages, let's also install tqdm to produce a nice-looking progress bar.

$ pip install tqdm

Now I need to upload the documents to the database. The script for this will be called index_docs.py. Let's start by defining some constants:

# index_docs.py

# Pinecone db name and upload batch size
index_name = 'semaphore'
upsert_batch_size = 20

# OpenAI embedding and tokenizer models
embed_model = "text-embedding-ada-002"
encoding_model = "cl100k_base"
max_tokens_model = 8191

Next, we'll need a function to count tokens, adapted from the token-counting example on OpenAI's documentation page:

import tiktoken

def num_tokens_from_string(string: str) -> int:
    """Returns the number of tokens in a text string."""
    encoding = tiktoken.get_encoding(encoding_model)
    num_tokens = len(encoding.encode(string))
    return num_tokens

Finally, I'll need some filtering functions to convert the original document into usable examples. Most examples in the documentation are between code fences, so I'll just extract all YAML code from every file:

import re

def extract_yaml(text: str) -> list:
    """Returns list with all the YAML code blocks found in text."""
    # [\w\W] matches any character, including newlines; *? keeps each match non-greedy
    matches = [m.group(1) for m in re.finditer(r"```yaml([\w\W]*?)```", text)]
    return matches

With the helper functions in place, the next snippet loads the files into memory and extracts the examples:

from tqdm import tqdm
import sys
import os
import pathlib

repo_path = sys.argv[1]
repo_path = os.path.abspath(repo_path)
repo = pathlib.Path(repo_path)

markdown_files = list(repo.glob("**/*.md")) + list(
    repo.glob("**/*.mdx")
)

print(f"Extracting YAML from Markdown files in {repo_path}")
new_data = []
for i in tqdm(range(0, len(markdown_files))):
    markdown_file = markdown_files[i]
    with open(markdown_file, "r") as f:
        relative_path = markdown_file.relative_to(repo_path)
        text = str(f.read())
        if text == '':
            continue
        yamls = extract_yaml(text)
        j = 0
        for y in yamls:
            j = j+1
            # skip examples that exceed the embedding model's token limit
            if num_tokens_from_string(y) > max_tokens_model:
                continue
            new_data.append({
                "source": str(relative_path),
                "text": y,
                "id": f"github.com/semaphore/docs/{relative_path}[{j}]"
            })

At this point, all the YAMLs should be stored in the new_data list. The final step is to upload the embeddings into Pinecone.

import pinecone
import openai

api_key = os.getenv("PINECONE_API_KEY")
env = os.getenv("PINECONE_ENVIRONMENT")
pinecone.init(api_key=api_key, environment=env)
index = pinecone.Index(index_name)

print("Creating embeddings and uploading vectors to database")
for i in tqdm(range(0, len(new_data), upsert_batch_size)):
    i_end = min(len(new_data), i+upsert_batch_size)
    meta_batch = new_data[i:i_end]
    ids_batch = [x['id'] for x in meta_batch]
    texts = [x['text'] for x in meta_batch]
    embedding = openai.Embedding.create(input=texts, engine=embed_model)
    embeds = [record['embedding'] for record in embedding['data']]

    # clean metadata before upserting
    meta_batch = [{
        'id': x['id'],
        'text': x['text'],
        'source': x['source']
    } for x in meta_batch]

    to_upsert = list(zip(ids_batch, embeds, meta_batch))
    index.upsert(vectors=to_upsert)
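The loop above walks new_data in slices of upsert_batch_size so that each request to OpenAI and Pinecone stays small. The same slicing logic, pulled out into a standalone generator (batches is a hypothetical helper, not part of the original script), makes it easy to check that the last, shorter batch is not lost:

```python
from typing import Iterator, Sequence, TypeVar

T = TypeVar("T")


def batches(items: Sequence[T], size: int) -> Iterator[Sequence[T]]:
    """Yield consecutive slices of at most `size` items, mirroring the i/i_end loop."""
    if size <= 0:
        raise ValueError("size must be positive")
    for i in range(0, len(items), size):
        i_end = min(len(items), i + size)
        yield items[i:i_end]


records = [{"id": f"doc[{n}]"} for n in range(45)]
print([len(batch) for batch in batches(records, 20)])  # [20, 20, 5]
```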

As a reference, you can find the full index_docs.py file in the demo repository.

Let's run the index script to finish with the database setup:

$ git clone https://github.com/semaphoreci/docs.git /tmp/docs
$ source .env
$ python index_docs.py /tmp/docs

Testing the Database

The Pinecone dashboard should show vectors in the database.

We can query the database with the following code, which you can run as a script or in the Python REPL directly:

$ python
>>> import os
>>> import pinecone
>>> import openai
>>> # Compute embeddings for string "Docker Containers"
>>> embeddings = openai.Embedding.create(input="Docker Containers", engine="text-embedding-ada-002")
>>> # Connect to database
>>> index_name = "semaphore"
>>> api_key = os.getenv("PINECONE_API_KEY")
>>> env = os.getenv("PINECONE_ENVIRONMENT")
>>> pinecone.init(api_key=api_key, environment=env)
>>> index = pinecone.Index(index_name)
>>> # Query database
>>> matches = index.query(vector=embeddings['data'][0]['embedding'], top_k=1, include_metadata=True)
>>> matches['matches'][0]

{'id': 'github.com/semaphore/docs/docs/ci-cd-environment/docker-authentication.md[3]',
 'metadata': {'id': 'github.com/semaphore/docs/docs/ci-cd-environment/docker-authentication.md[3]',
              'source': 'docs/ci-cd-environment/docker-authentication.md',
              'text': '\n'
                      '# .semaphore/semaphore.yml\n'
                      'version: v1.0\n'
                      'name: Using a Docker image\n'
                      'agent:\n'
                      '  machine:\n'
                      '    type: e1-standard-2\n'
                      '    os_image: ubuntu1804\n'
                      '\n'
                      'blocks:\n'
                      '  - name: Run container from Docker Hub\n'
                      '    task:\n'
                      '      jobs:\n'
                      '        - name: Authenticate docker pull\n'
                      '          commands:\n'
                      '            - checkout\n'
                      '            - echo $DOCKERHUB_PASSWORD | docker login '
                      '--username "$DOCKERHUB_USERNAME" --password-stdin\n'
                      '            - docker pull /\n'
                      '            - docker images\n'
                      '            - docker run /\n'
                      '      secrets:\n'
                      '        - name: docker-hub\n'},
 'score': 0.796259582,
 'values': []}

As you can see, the first match is the YAML for a Semaphore pipeline that pulls a Docker image and runs it. It's a good start since it's relevant to our "Docker Containers" search string.

Building the Bot

We have the data, and we know how to query it. Let's put it to work in the bot.

The steps for processing the prompt are:

Take the user's prompt.

Calculate its vector.

Retrieve relevant context from the database.

Send the user's prompt along with context to GPT-3.

Forward the model's response to the user.

As usual, I'll start by defining some constants in complete.py, the bot's main script:

# complete.py

# Pinecone database name, number of matches to retrieve,
# cutoff similarity score, and how many tokens to use as context
index_name = 'semaphore'
context_cap_per_query = 30
match_min_score = 0.75
context_tokens_per_query = 3000

# OpenAI LLM model parameters
chat_engine_model = "gpt-3.5-turbo"
max_tokens_model = 4096
temperature = 0.2
embed_model = "text-embedding-ada-002"
encoding_model_messages = "gpt-3.5-turbo-0301"
encoding_model_strings = "cl100k_base"

import pinecone
import os

# Connect with Pinecone db and index
api_key = os.getenv("PINECONE_API_KEY")
env = os.getenv("PINECONE_ENVIRONMENT")
pinecone.init(api_key=api_key, environment=env)
index = pinecone.Index(index_name)

Next, I'll add functions to count tokens as shown in the OpenAI examples. The first function counts tokens in a string, while the second counts tokens in messages. We'll see messages in detail in a bit. For now, let's just say it's a structure that keeps the state of the conversation in memory.

import tiktoken

def num_tokens_from_string(string: str) -> int:
    """Returns the number of tokens in a text string."""
    encoding = tiktoken.get_encoding(encoding_model_strings)
    num_tokens = len(encoding.encode(string))
    return num_tokens

def num_tokens_from_messages(messages):
    """Returns the number of tokens used by a list of messages. Compatible with model """
    try:
        encoding = tiktoken.encoding_for_model(encoding_model_messages)
    except KeyError:
        encoding = tiktoken.get_encoding(encoding_model_strings)

    num_tokens = 0
    for message in messages:
        num_tokens += 4  # every message follows {role/name}\n{content}\n
        for key, value in message.items():
            num_tokens += len(encoding.encode(value))
            if key == "name":  # if there's a name, the role is omitted
                num_tokens += -1  # role is always required and always 1 token
    num_tokens += 2  # every reply is primed with assistant
    return num_tokens

The following function takes the original prompt and context strings to return an enriched prompt for GPT-3:

def get_prompt(query: str, context: str) -> str:
    """Return the prompt with query and context."""
    return (
        f"Create the continuous integration pipeline YAML code to fulfill the requested task.\n" +
        f"Below you will find some context that may help. Ignore it if it seems irrelevant.\n\n" +
        f"Context:\n{context}" +
        f"\n\nTask: {query}\n\nYAML Code:"
    )

The get_message function wraps a role and its content in the message format the OpenAI chat API expects:

def get_message(role: str, content: str) -> dict:
    """Generate a message for OpenAI API completion."""
    return {"role": role, "content": content}

There are three types of roles that affect how the model reacts:

User: for the user's original prompt.

System: helps set the behavior of the assistant. While there is some controversy regarding its effectiveness, it appears to be more effective when sent at the end of the messages list.

Assistant: represents past responses of the model. The OpenAI API does not have a "memory"; instead, we must send the model's previous responses back during each interaction to maintain the conversation.

Now for the engaging part. The get_context function takes the prompt, queries the database, and builds a context string from the matches, following these rules:

Matches with a similarity score below match_min_score are ignored.

A match is only added if the combined text stays under context_tokens_per_query, the space I reserved for context.

The function stops once it has processed all the matches returned by the search.

import openai

def get_context(query: str, max_tokens: int) -> str:
    """Build a context string from database matches, staying under max_tokens. Returns the context string."""
    embeddings = openai.Embedding.create(
        input=[query],
        engine=embed_model
    )

    # search the database
    vectors = embeddings['data'][0]['embedding']
    results = index.query(vector=vectors, top_k=context_cap_per_query, include_metadata=True)
    matches = results['matches']

    # filter and aggregate context
    usable_context = ""
    context_count = 0
    for i in range(0, len(matches)):
        if matches[i]['score'] < match_min_score:
            # skip context with low similarity score
            continue
        context = matches[i]['metadata']['text']
        token_count = num_tokens_from_string(usable_context + '\n---\n' + context)
        if token_count < max_tokens:
            usable_context = usable_context + '\n---\n' + context
            context_count = context_count + 1

    print(f"Found {context_count} contexts for your query")
    return usable_context
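Because get_context mixes API calls with the selection logic, it can help to test the selection step on its own. The sketch below restates that loop as a pure function over (score, text) pairs; the whitespace-based count_tokens is a rough, hypothetical stand-in for the tiktoken counter, so its totals will differ from real token counts.

```python
def count_tokens(text: str) -> int:
    """Hypothetical stand-in for num_tokens_from_string: counts whitespace-separated words."""
    return len(text.split())


def assemble_context(matches: list[tuple[float, str]], min_score: float, max_tokens: int) -> str:
    """Join matches scoring at least min_score while the combined text stays under max_tokens."""
    usable_context = ""
    for score, text in matches:
        if score < min_score:
            continue  # too dissimilar to the query
        candidate = usable_context + "\n---\n" + text
        if count_tokens(candidate) < max_tokens:
            usable_context = candidate
    return usable_context


matches = [
    (0.82, "docker build and push"),
    (0.60, "unrelated java example"),
    (0.78, "docker login with secrets"),
]
print(assemble_context(matches, min_score=0.75, max_tokens=10))
```

With max_tokens=10 the third match is dropped because the combined text would reach the budget; a larger budget lets it through, while the 0.60 match is always filtered out by the score threshold.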

The next and final function, complete, issues the API request to OpenAI and returns the model's response.

def complete(messages):
    """Query the OpenAI model. Returns the first answer. """
    res = openai.ChatCompletion.create(
        model=chat_engine_model,
        messages=messages,
        temperature=temperature
    )
    return res.choices[0].message.content.strip()

That's all; now I only have to deal with the command line arguments and call the functions in the correct order:

import sys

query = sys.argv[1]

context = get_context(query, context_tokens_per_query)
prompt = get_prompt(query, context)

# initialize messages list to send to OpenAI API
messages = []
messages.append(get_message('user', prompt))
messages.append(get_message('system', 'You are a helpful assistant that writes YAML code for Semaphore continuous integration pipelines and explains them. Return YAML code inside code fences.'))

if num_tokens_from_messages(messages) >= max_tokens_model:
    raise Exception('Model token size limit reached')

print("Working on your query... ")
answer = complete(messages)
print("Answer:\n")
print(answer)
messages.append(get_message('assistant', answer))

It's time to run the script and see how it fares:

$ python complete.py "Create a CI pipeline that builds and uploads a Docker image to Docker Hub"

The result is:

version: v1.0
name: Docker Build and Push
agent:
  machine:
    type: e1-standard-2
    os_image: ubuntu1804

blocks:
  - name: "Build and Push Docker Image"
    task:
      jobs:
        - name: "Docker Build and Push"
          commands:
            - checkout
            - docker build -t /: .
            - echo "$DOCKERHUB_PASSWORD" | docker login -u "$DOCKERHUB_USERNAME" --password-stdin
            - docker push /:

promotions:
  - name: Deploy to production
    pipeline_file: deploy-production.yml
    auto_promote:
      when: "result = 'passed' and branch = 'master'"

This is the first good result. The model has inferred the syntax from the context examples we provided.

Thoughts on Expanding the Bot's Capabilities

Remember that I started with a modest goal: creating an assistant to write YAML pipelines. With richer content in my vector database, I can generalize the bot to answer any question about Semaphore (or any product — remember cloning the docs into /tmp?).

The key to obtaining good answers is — unsurprisingly — quality context. Merely uploading every document into the vector database is unlikely to yield good results. The context database should be curated, tagged with descriptive metadata, and be concise. Otherwise, we risk filling the token quota in the prompt with irrelevant context.

So, in a sense, there is an art — and a great deal of trial and error — involved in fine-tuning the bot to meet our needs. We can experiment with the context limit, remove low-quality content, summarize, and filter out irrelevant context by adjusting the similarity score.

Implementing a Proper Chatbot

You may have noticed that my bot does not let us have an actual conversation like ChatGPT does. We ask one question and get one answer.

Converting the bot into a fully-fledged chatbot is, in principle, not too challenging. We can maintain the conversation by resending previous responses to the model with each API request. Prior GPT-3 answers are sent back under the "assistant" role. For example:

messages = []
while True:
    query = input('Type your prompt:\n')
    context = get_context(query, context_tokens_per_query)
    prompt = get_prompt(query, context)
    messages.append(get_message('user', prompt))
    messages.append(get_message('system', 'You are a helpful assistant that writes YAML code for Semaphore continuous integration pipelines and explains them. Return YAML code inside code fences.'))

    if num_tokens_from_messages(messages) >= max_tokens_model:
        raise Exception('Model token size limit reached')

    print("Working on your query... ")
    answer = complete(messages)
    print("Answer:\n")
    print(answer)

    # remove system message and append model's answer
    messages.pop()
    messages.append(get_message('assistant', answer))

Unfortunately, this implementation is rather rudimentary. It will not support extended conversations as the token count increases with each interaction. Soon enough, we will reach the 4096-token limit for GPT-3, preventing further dialogue.

So, we have to find some way of keeping the request within token limits. A few strategies follow:

Delete older messages. While this is the simplest solution, it limits the conversation's "memory" to only the most recent messages.

Summarize previous messages. We can ask the model to condense earlier messages and substitute the summary for the original questions and answers. Though this approach increases the cost and lag between queries, it may produce better results than simply deleting past messages.

Set a strict limit on the number of interactions.

Wait for the GPT-4 API general availability, which is not only smarter but has double token capacity.

Use a newer model like "gpt-3.5-turbo-16k" which can handle up to 16k tokens.
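The first strategy, deleting older messages, can be sketched as a loop that drops the oldest user and assistant messages until the conversation fits a token budget. The count_tokens argument is a placeholder; in complete.py you could pass num_tokens_from_messages, while the rough_count estimator below is hypothetical and only used for the demo. The sketch keeps system messages and always keeps the latest message.

```python
from typing import Callable

Message = dict[str, str]


def trim_history(
    messages: list[Message],
    max_tokens: int,
    count_tokens: Callable[[list[Message]], int],
) -> list[Message]:
    """Drop the oldest non-system messages until count_tokens(messages) < max_tokens."""
    trimmed = list(messages)  # work on a copy; the caller's list is untouched
    while count_tokens(trimmed) >= max_tokens:
        # candidates: every non-system message except the most recent one
        droppable = [i for i, m in enumerate(trimmed[:-1]) if m["role"] != "system"]
        if not droppable:
            raise ValueError("cannot fit the conversation within max_tokens")
        del trimmed[droppable[0]]
    return trimmed


def rough_count(messages: list[Message]) -> int:
    """Hypothetical token estimate: whitespace-separated words in all contents."""
    return sum(len(m["content"].split()) for m in messages)


history = [
    {"role": "user", "content": "first question about docker"},
    {"role": "assistant", "content": "first answer"},
    {"role": "user", "content": "second question"},
]
print(trim_history(history, max_tokens=5, count_tokens=rough_count))
```

A more careful version might drop each question together with its answer so that no assistant reply is left without the prompt that produced it.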

Conclusion

Enhancing the bot's responses is possible with word embeddings and a good context database. To achieve this, we need good quality documentation. There is a substantial amount of trial and error involved in developing a bot that seemingly possesses a grasp of the subject matter.

I hope this in-depth exploration of word embeddings and large language models aids you in building a more potent bot, customized to your requirements.

Happy building!

Published at DZone with permission of Tomas Fernandez. See the original article here.

